Governance Strategies for Generative AI

As generative AI reshapes industries, the challenge has shifted from model performance to responsible control. Organizations are now expected not only to innovate with AI but to prove that their systems are transparent, auditable, and aligned with global regulations.

Without structured governance, AI systems risk producing biased content, leaking sensitive data, or violating regulations such as GDPR and the EU AI Act.

This guide explores governance strategies for generative AI — integrating policy, oversight, and automation to ensure trustworthy, compliant, and accountable AI operations.

Generative AI governance is not about restricting innovation — it’s about giving innovation a safe framework to thrive.

Understanding Generative AI Governance

AI governance defines how organizations control and monitor AI systems throughout their lifecycle — from data ingestion to model output.

For generative models, governance must cover data security, ethical use, and accountability across distributed environments.

Key governance questions include:

- Who has access to prompts, fine-tuning data, and model weights?

- How are AI outputs reviewed, logged, and corrected?

- Which policies ensure data compliance and prevent model misuse?

A governance framework creates traceability — every action, model decision, and data flow can be linked back to documented policy and technical control.

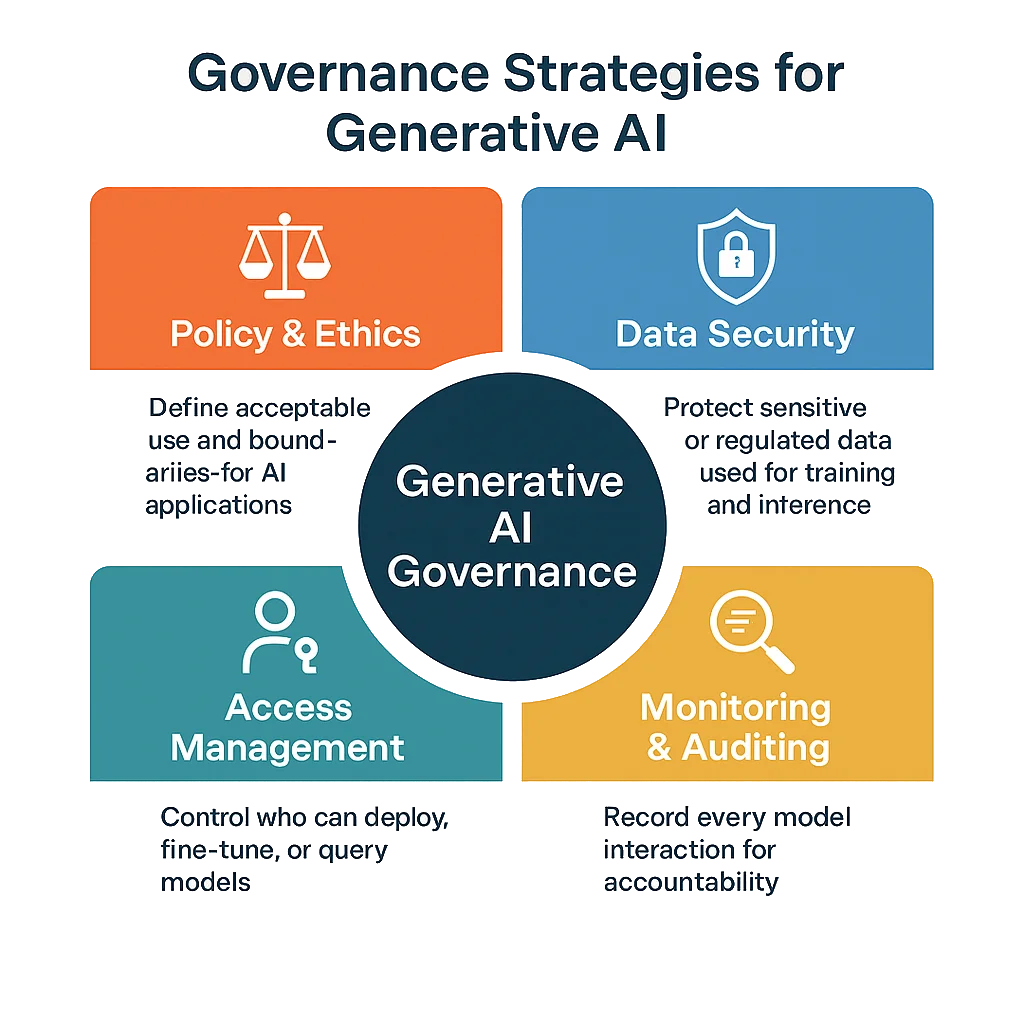

Core Pillars of Generative AI Governance

| Pillar | Objective | Example Controls |
|---|---|---|
| Policy & Ethics | Define acceptable use and boundaries for AI applications. | AI Code of Conduct, Responsible Use Guidelines |
| Data Security | Protect sensitive or regulated data used for training and inference. | Dynamic Data Masking, Encryption |
| Access Management | Control who can deploy, fine-tune, or query models. | Role-Based Access Control |
| Monitoring & Auditing | Record every model interaction for accountability. | Audit Logs, Activity Monitoring |
| Compliance Automation | Align with regional laws and industry frameworks. | Compliance Manager, GDPR, HIPAA, PCI DSS |

According to McKinsey Research, over 65% of enterprises deploying generative AI lack defined governance policies — making them vulnerable to untracked risks and regulatory exposure.

Governance Framework for Generative AI

A mature governance strategy integrates organizational policies with technical enforcement across five stages:

Data Lifecycle Control

- Discover and classify data with data discovery tools.

- Apply masking to personal identifiers before use in model pipelines.

- Log all data access for audit readiness.
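
As a rough illustration of the masking and access-logging steps above, the Python sketch below replaces two kinds of identifier with placeholders and writes a log entry on every read. The regular expressions, the placeholder strings, and the function names `mask_identifiers` and `read_for_pipeline` are assumptions made for this example. They are not part of any specific product, and real detection needs far broader and better-tested patterns.

```python
import logging
import re

logger = logging.getLogger("data_access")

# Hypothetical patterns for illustration only; production detection needs
# wider coverage (names, phone numbers, account IDs) and proper testing.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_identifiers(text: str) -> str:
    """Replace e-mail addresses and US SSN-shaped strings with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return SSN_RE.sub("[SSN]", text)


def read_for_pipeline(record_id: str, text: str, user: str) -> str:
    """Log the access, then return masked text for downstream model use."""
    logger.info("data_access user=%s record=%s", user, record_id)
    return mask_identifiers(text)
```

Masking at the read boundary means the model pipeline never receives the raw identifiers, and the log line gives auditors a record of who touched which record.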

Model Lifecycle Management

- Maintain model versioning, lineage, and approval workflows.

- Validate fine-tuning datasets for bias or copyright violations.

- Require cryptographic signing for approved models.
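
One simplified way to picture the signing requirement is shown below. This sketch uses an HMAC-SHA256 tag from the Python standard library as a stand-in for a signature. That is an assumption made to keep the example self-contained: an HMAC relies on a shared secret, whereas a production approval workflow would more likely use asymmetric signatures so that verifiers never hold the signing key. The function names are hypothetical.

```python
import hashlib
import hmac


def sign_model(artifact: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag computed over the model artifact bytes."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()


def verify_model(artifact: bytes, key: bytes, tag: str) -> bool:
    """Compare tags in constant time; False means artifact or tag changed."""
    expected = sign_model(artifact, key)
    return hmac.compare_digest(expected, tag)
```

A deployment gate could call `verify_model` before loading weights and refuse any artifact whose tag does not match the one recorded at approval time.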

Access and Identity Governance

- Implement least-privilege access using RBAC.

- Review API tokens, roles, and permissions quarterly.

- Apply reverse proxy controls for secure traffic routing.
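
The least-privilege and quarterly-review points above can be sketched in a few lines of Python. The role names, the action names, and the 90-day review window are illustrative assumptions, not a prescribed scheme.

```python
from datetime import datetime, timedelta

# Hypothetical role-to-permission mapping; names are illustrative only.
ROLE_PERMISSIONS = {
    "viewer": {"query"},
    "ml_engineer": {"query", "fine_tune"},
    "release_manager": {"query", "deploy"},
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in ROLE_PERMISSIONS.get(role, set())


def tokens_due_for_review(last_reviewed: dict, now: datetime,
                          max_age_days: int = 90) -> list:
    """Return token names whose last review is older than max_age_days."""
    limit = timedelta(days=max_age_days)
    return [name for name, when in last_reviewed.items() if now - when > limit]
```

Denying by default keeps the mapping the single place where access is granted, which makes the periodic review a matter of reading one table.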

Operational Oversight

- Enable continuous behavior analytics to detect anomalous prompts.

- Integrate audit trails into SOC or SIEM systems.

- Use dashboards for model explainability and risk visibility.
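
To make the oversight steps above more concrete, here is a minimal Python sketch of an audit record serialized as a JSON line, plus a very crude anomaly check. The field names and the length-based z-score heuristic are assumptions chosen for brevity. Real behavior analytics would consider far more signals than prompt length, and the right log schema depends on the SIEM in use.

```python
import json
import statistics
from datetime import datetime, timezone


def audit_event(user: str, model: str, prompt: str, response: str,
                when: datetime = None) -> str:
    """Serialize one model interaction as a JSON line for log shipping."""
    when = when or datetime.now(timezone.utc)
    return json.dumps({
        "timestamp": when.isoformat(),
        "user": user,
        "model": model,
        "prompt": prompt,
        "response": response,
    }, sort_keys=True)


def is_anomalous_length(prompt: str, baseline_lengths: list,
                        threshold: float = 3.0) -> bool:
    """Flag prompts whose length is more than `threshold` sample standard
    deviations from the baseline mean (needs at least two baseline values)."""
    mean = statistics.fmean(baseline_lengths)
    stdev = statistics.stdev(baseline_lengths)
    if stdev == 0:
        return len(prompt) != mean
    return abs(len(prompt) - mean) / stdev > threshold
```

If prompts can contain personal data, masking them before they reach the audit log is worth considering.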

Compliance Assurance & Reporting

- Automate evidence collection using DataSunrise Compliance Manager.

- Align policies with frameworks like ISO/IEC 42001 (AI Management System).

- Provide on-demand compliance reports for audits or executive review.
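
Automated evidence collection can be pictured as gathering the results of individual control checks into one report. The sketch below is a generic Python illustration and does not represent the interface of any particular compliance product. The `ControlCheck` fields and the example control identifiers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ControlCheck:
    control_id: str  # hypothetical identifier, e.g. "masking-enabled"
    framework: str   # framework the control maps to, e.g. "GDPR"
    passed: bool     # outcome of the automated check
    evidence: str    # pointer to the log or config that proves the outcome


def build_report(checks: list) -> dict:
    """Summarize control checks into a report suitable for audit review."""
    failed = [c.control_id for c in checks if not c.passed]
    return {
        "total": len(checks),
        "passed": len(checks) - len(failed),
        "failed_controls": failed,
        "compliant": not failed,
    }
```

Keeping an evidence pointer on every check is what lets a report be produced on demand without someone reassembling proof by hand.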

Combine technical safeguards with policy enforcement — governance fails if either side operates in isolation.

Governance Implementation Checklist

| Step | Description | Tools / Techniques |
|---|---|---|
| 1. Define Governance Charter | Establish scope, ownership, and compliance goals. | Policy documents, AI risk register |
| 2. Classify & Protect Data | Discover, tag, and mask sensitive data before model access. | Data Discovery, Masking |
| 3. Secure the Model Lifecycle | Versioning, approval, rollback, and signing for models. | CI/CD policy gates, signing tools |
| 4. Monitor Activity | Capture all model queries and responses. | Activity Monitoring, Audit Logs |
| 5. Automate Compliance Evidence | Generate proof of control adherence. | Compliance Manager |
| 6. Review and Improve | Conduct regular AI risk assessments and red teaming. | Behavior Analytics, AI Red Teaming |

Real-World Example: Governance in a Multicloud AI Deployment

A financial institution deploying generative AI assistants across AWS and Azure used DataSunrise to:

- Enforce encryption for every database and vector store.

- Automatically detect sensitive records via data discovery.

- Audit all prompt logs using activity monitoring.

- Produce monthly compliance reports aligned with GDPR Article 35 (Data Protection Impact Assessments).

This integrated approach reduced manual audit preparation time by 80% while maintaining continuous visibility into AI data use.

Ethical and Regulatory Alignment

Generative AI governance must extend beyond compliance to ethical stewardship.

Key principles include:

- Transparency: Make model provenance and decision logic visible.

- Accountability: Assign clear ownership for model behavior.

- Fairness: Monitor for bias in both data and responses.

- Explainability: Provide human-readable output justifications.

These principles align with the OECD AI Principles and form the ethical core of any enterprise governance program.

FAQ: Governance Strategies for Generative AI

Q1. What is AI governance?

AI governance establishes policies and controls to manage risk, ensure compliance, and maintain ethical AI use throughout its lifecycle.

Q2. Why is governance critical for generative AI?

Because generative systems can produce new, unpredictable content that may expose sensitive data or violate regulations.

Q3. How can organizations enforce governance automatically?

By integrating DataSunrise Compliance Manager for rule enforcement, auditing, and reporting.

Q4. Which frameworks guide AI governance?

NIST AI RMF, ISO/IEC 42001, and the EU AI Act.

Q5. What are the common pitfalls?

Over-reliance on manual oversight, unclassified data, and lack of continuous monitoring.