Generative AI in Cybersecurity

Introduction

Cyberattacks continue to grow in complexity and automation, so defenders can no longer rely on manual analysis or static rules. Generative AI introduces a new paradigm, enabling defense systems to think, simulate, and react at machine speed.

According to the IBM X-Force 2025 Threat Intelligence Index, adversaries increasingly use AI-generated code, deepfakes, and synthetic identities to evade detection. Traditional rule-based systems struggle to adapt fast enough.

This article explores how generative AI enhances cybersecurity — moving from reactive detection to predictive simulation, where machine learning models anticipate and counteract attacks autonomously.

Unlike earlier automation tools that simply react to anomalies, generative models create synthetic threat patterns, stress-test systems, and identify vulnerabilities before attackers can exploit them.

DataSunrise complements this capability with autonomous compliance management, real-time auditing, and behavior analytics across databases and data pipelines, delivering precision defense without disrupting business workflows.

Generative AI doesn’t just detect threats — it predicts and simulates them. This capability transforms defense from reactive to proactive.

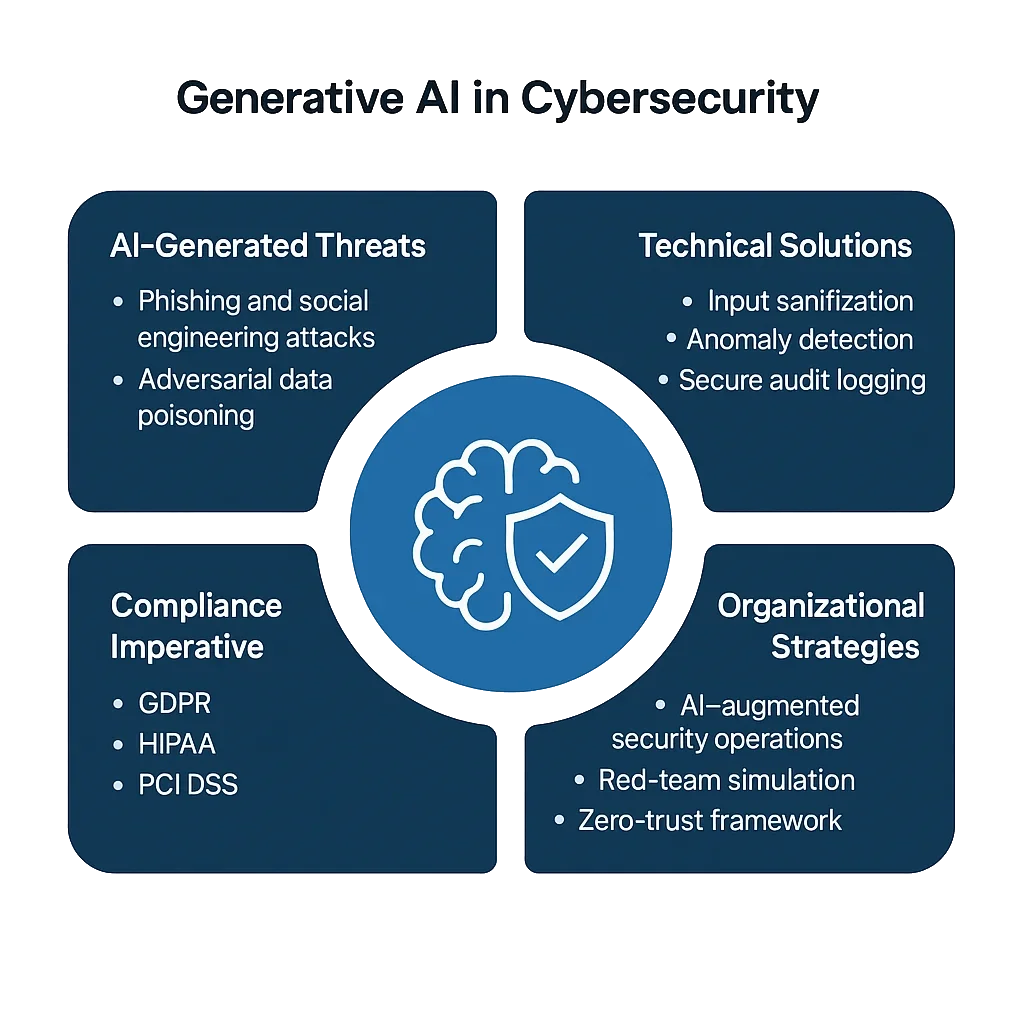

The Core Challenges with Generative AI in Cybersecurity

Generative AI expands both defensive potential and the attack surface. While it enables predictive threat modeling, it also arms adversaries with scalable and adaptive tactics.

1. AI-Generated Phishing and Social Engineering

Attackers now use LLMs to craft convincing, multilingual phishing messages with accurate grammar, tone, and personalization — often indistinguishable from legitimate communications.

Traditional spam filters rely on keyword patterns, but AI-generated phishing adapts in real time, modifying content and tone to evade detection. Without behavior analytics, organizations face a flood of precision-targeted scams that can compromise even security-aware employees.

2. Adversarial Data Poisoning

Threat actors inject malicious data into AI training pipelines, distorting decision boundaries. Poisoning can be subtle: a small portion of mislabeled or manipulated inputs (even under 3%) can bias machine-learning detectors toward ignoring specific attack signatures.

Once embedded, these poisoned models may consistently miss certain malware or privilege-escalation events, allowing attackers long-term persistence.
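One common screening idea for label-flipping poisoning is to flag training samples whose label disagrees with most of their nearest neighbors. The following is a minimal sketch under stated assumptions: features are small numeric vectors, the dataset fits in memory, and the function name `flag_suspicious_labels` and the parameter `k` are hypothetical names chosen for illustration.

```python
import math
from collections import Counter

def flag_suspicious_labels(samples, labels, k=3):
    """Return indices whose label disagrees with the majority of their k nearest neighbors."""
    suspicious = []
    for i, point in enumerate(samples):
        # Sort all other samples by Euclidean distance and keep the k closest
        neighbors = sorted(
            (math.dist(point, other), j)
            for j, other in enumerate(samples)
            if j != i
        )[:k]
        votes = Counter(labels[j] for _, j in neighbors)
        majority_label, _ = votes.most_common(1)[0]
        if majority_label != labels[i]:
            suspicious.append(i)
    return suspicious

# Toy data: two clusters; index 6 sits in the "malicious" cluster but is labeled "benign"
samples = [(1.0, 1.0), (1.1, 0.9), (0.9, 1.1), (1.2, 1.0),
           (5.0, 5.0), (5.1, 4.9), (4.9, 5.1), (5.2, 5.0)]
labels = ["benign", "benign", "benign", "benign",
          "malicious", "malicious", "benign", "malicious"]
print(flag_suspicious_labels(samples, labels))  # [6]
```

Flagged samples are candidates for manual review rather than proof of poisoning; legitimate edge cases can also disagree with their neighbors.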

3. Model Exploitation and Data Leakage

Generative systems that process sensitive audit trails or PII risk unintentional data exposure.

An attacker might extract fragments of confidential information through crafted prompts — known as model inversion or prompt extraction — revealing internal tokens, database structure, or even anonymized user data.

In sectors like finance or healthcare, such leakage isn’t just a security incident — it’s a direct compliance violation.
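One way to reduce this exposure is to redact sensitive-looking values from model output before it leaves the system. The sketch below assumes three illustrative regular expressions; the pattern set and the function name `redact_model_output` are hypothetical, and real deployments would need broader, locale-aware coverage.

```python
import re

# Illustrative patterns only; these are assumptions, not an exhaustive PII catalog
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}

def redact_model_output(text: str) -> str:
    """Replace each PII-like match with a labeled placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label} REDACTED]", text)
    return text

response = "Contact jane.doe@example.com, SSN 123-45-6789, card 4111 1111 1111 1111."
print(redact_model_output(response))
# Contact [EMAIL REDACTED], SSN [SSN REDACTED], card [CARD REDACTED].
```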

4. Compliance Gaps in AI Operations

Even when AI tools perform effectively, they often fall short of compliance.

Organizations deploying models trained on mixed data sources without adherence to GDPR, HIPAA, or PCI DSS frameworks risk breaching regulations unintentionally.

AI’s black-box nature complicates accountability: it’s difficult to prove what data was used, how it was transformed, or why a model produced a certain output — all of which auditors now expect clear evidence for.
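A lightweight way to produce that evidence is to fingerprint the data after every preparation step and keep the resulting manifest alongside the model. This is a sketch under assumptions: the helper names `fingerprint` and `build_provenance`, the `crm_export` source label, and the `drop_email` transform are all hypothetical.

```python
import hashlib
import json

def fingerprint(rows: list) -> str:
    """SHA-256 over a canonical JSON encoding of the rows."""
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def build_provenance(source_name: str, raw_rows: list, transforms: list):
    """Apply transforms in order, recording a fingerprint after each step."""
    steps = [{"step": "load", "source": source_name, "sha256": fingerprint(raw_rows)}]
    rows = raw_rows
    for name, fn in transforms:
        rows = fn(rows)
        steps.append({"step": name, "sha256": fingerprint(rows)})
    return rows, steps

def drop_email(rows: list) -> list:
    """Example transform: remove the email column before training."""
    return [{k: v for k, v in row.items() if k != "email"} for row in rows]

raw = [{"user": "u1", "email": "u1@example.com", "latency_ms": 120}]
clean, manifest = build_provenance("crm_export", raw, [("drop_email", drop_email)])
print(json.dumps(manifest, indent=2))
```

The manifest records what data went in and how it was transformed; it does not explain why a model produced a given output, which needs separate explainability tooling.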

Technical Solutions: Code-Driven Defense

To address these challenges, security teams are embedding code-level protections into AI workflows so that systems remain explainable, measurable, and compliant.

Generative defense mechanisms rely on self-learning feedback loops that continuously refine baselines and responses.

1. Input Sanitization and Threat Filtering

Filtering prompts, logs, and query data before analysis prevents injection attacks and mitigates context manipulation.

```python
import re

def sanitize_payload(data: str) -> str:
    """Block dangerous patterns or commands before AI ingestion."""
    blocked = [r"delete", r"drop\s+table", r"system:", r"ignore\s+previous"]
    for term in blocked:
        data = re.sub(term, "[BLOCKED]", data, flags=re.IGNORECASE)
    return data.strip()

# Example usage
payload = "system: drop table users;"
print(sanitize_payload(payload))
# Output: [BLOCKED] [BLOCKED] users;
```

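This rule-based filter is simple, but it remains a practical first layer of defense.

It neutralizes known malicious instructions embedded in chat or query inputs that could trigger destructive system commands, a key vector in prompt injection and data exfiltration attacks. Because a denylist only matches patterns it already knows, rephrased or encoded payloads can slip past it, so it should complement privilege restrictions and output controls rather than replace them.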

2. Anomaly Detection with Generative Modeling

Generative AI can model normal activity and flag deviations autonomously.

Instead of static thresholds, adaptive models analyze patterns in real time, learning contextual norms that evolve with workloads.

```python
import statistics

class BehaviorModel:
    def __init__(self):
        self.history = []

    def record_query_time(self, latency_ms: float):
        self.history.append(latency_ms)

    def detect_anomaly(self, current_latency: float) -> bool:
        """Detect anomalies compared to learned baseline."""
        if len(self.history) < 5:
            return False
        mean = statistics.mean(self.history)
        stdev = statistics.stdev(self.history)
        return abs(current_latency - mean) > 2 * stdev

# Example
bm = BehaviorModel()
for t in [120, 130, 125, 128, 126]:
    bm.record_query_time(t)
print(bm.detect_anomaly(200))  # True
```

When integrated with database activity monitoring, this approach continuously adapts — detecting not just anomalies, but emerging behavioral shifts that may indicate evolving insider threats or AI-assisted intrusions.
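The baseline above keeps every observation forever, so it adapts slowly as history grows. A sliding window is one simple way to let the baseline follow the workload. The sketch below is an assumption-laden variant, not the article's method: the class name `RollingBehaviorModel` and the `window` and `threshold` parameters are hypothetical.

```python
import statistics
from collections import deque

class RollingBehaviorModel:
    def __init__(self, window: int = 50, threshold: float = 3.0):
        # deque with maxlen discards the oldest value once the window is full
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, latency_ms: float) -> bool:
        """Score the value against the current window, then add it to the window."""
        anomalous = False
        if len(self.history) >= 5:
            mean = statistics.mean(self.history)
            stdev = statistics.stdev(self.history)
            anomalous = abs(latency_ms - mean) > self.threshold * stdev
        self.history.append(latency_ms)
        return anomalous

model = RollingBehaviorModel(window=5)
for t in [120, 130, 125, 128, 126]:
    model.observe(t)
print(model.observe(200))  # True
```

Because anomalous values also enter the window, a sustained shift gradually becomes the new normal. That suits changing workloads, but it also means a patient attacker could drift the baseline, so a production system might exclude or down-weight flagged values.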

3. Secure Audit Logging for AI-Driven Operations

Logs are the DNA of cybersecurity — they enable visibility, traceability, and accountability.

Immutable, cryptographically signed audit logs support compliance verification and forensic investigation under frameworks like NIST AI RMF.

```python
import datetime
import hashlib
import json

def log_ai_event(event_type: str, user: str, details: dict) -> dict:
    """Record cryptographically verifiable AI events."""
    # Timezone-aware UTC timestamp; datetime.utcnow() is deprecated since Python 3.12
    timestamp = datetime.datetime.now(datetime.timezone.utc).isoformat()
    record = {
        "timestamp": timestamp,
        "event": event_type,
        "user": user,
        "details": details
    }
    # Hash a canonical (sorted-key) serialization so the digest is reproducible
    record_str = json.dumps(record, sort_keys=True)
    record["hash"] = hashlib.sha256(record_str.encode()).hexdigest()
    print(json.dumps(record, indent=2))
    return record

log_ai_event("threat_detected", "analyst01", {"severity": "high", "source": "GenAI module"})
```

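These logs help verify who triggered what and why. A plain SHA-256 digest makes accidental or careless modification detectable, but anyone who can edit a record can also recompute its hash, so a tamper-resistant evidence trail additionally needs a keyed or signed digest and append-only storage.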

When coupled with continuous data protection, this enables organizations to reconstruct full AI decision chains — a core requirement in upcoming AI regulatory regimes.
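One way to make such a log tamper-evident is to chain entries together with a keyed hash (HMAC), so that editing or removing any record invalidates every later one. The sketch below assumes a placeholder key and the hypothetical helpers `append_entry` and `verify_chain`. Note that HMAC is a symmetric construction; a true digital signature would require asymmetric keys.

```python
import hashlib
import hmac
import json

# Assumption: placeholder key; in practice, load it from a secrets manager
SECRET_KEY = b"demo-key-do-not-use-in-production"

def _sign(payload: dict, prev_mac: str) -> str:
    """HMAC-SHA256 over the previous entry's MAC plus this entry's canonical JSON."""
    message = prev_mac + json.dumps(payload, sort_keys=True)
    return hmac.new(SECRET_KEY, message.encode("utf-8"), hashlib.sha256).hexdigest()

def append_entry(chain: list, payload: dict) -> None:
    prev_mac = chain[-1]["mac"] if chain else ""
    chain.append({"payload": payload, "mac": _sign(payload, prev_mac)})

def verify_chain(chain: list) -> bool:
    """Recompute every MAC in order; any edit or deletion breaks the chain."""
    prev_mac = ""
    for entry in chain:
        expected = _sign(entry["payload"], prev_mac)
        if not hmac.compare_digest(expected, entry["mac"]):
            return False
        prev_mac = entry["mac"]
    return True

audit_log = []
append_entry(audit_log, {"event": "threat_detected", "user": "analyst01"})
append_entry(audit_log, {"event": "alert_closed", "user": "analyst01"})
print(verify_chain(audit_log))  # True

audit_log[0]["payload"]["user"] = "someone_else"  # simulate tampering
print(verify_chain(audit_log))  # False
```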

Organizational Strategies for Generative AI Defense

Technology alone cannot solve the evolving threat landscape. Organizational readiness, governance, and cross-team integration are just as critical.

1. AI-Augmented Security Operations Centers (A-SOC)

Security Operations Centers (SOCs) are evolving into adaptive ecosystems.

AI agents now assist human analysts by summarizing alerts, predicting escalation paths, and recommending mitigations based on historical outcomes.

Coupled with real-time notifications, these AI copilots drastically reduce incident response time while maintaining context across thousands of daily security events.
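Before any model summarizes alerts, a deterministic aggregation step usually condenses the raw stream. The sketch below illustrates only that pre-processing step, not an AI copilot itself; the alert fields (`rule`, `source`, `severity`), the severity ranking, and the function name `summarize_alerts` are assumptions made for illustration.

```python
from collections import Counter

# Assumed severity scale; adjust to match the alerting system in use
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}

def summarize_alerts(alerts: list) -> list:
    """Group alerts by (rule, source); order groups by worst severity, then volume."""
    counts = Counter()
    worst = {}
    for alert in alerts:
        key = (alert["rule"], alert["source"])
        counts[key] += 1
        current = worst.get(key)
        if current is None or SEVERITY_RANK[alert["severity"]] > SEVERITY_RANK[current]:
            worst[key] = alert["severity"]
    ordered = sorted(counts, key=lambda k: (-SEVERITY_RANK[worst[k]], -counts[k]))
    return [
        {"rule": rule, "source": source,
         "count": counts[(rule, source)], "max_severity": worst[(rule, source)]}
        for rule, source in ordered
    ]

alerts = [
    {"rule": "sql_injection", "source": "db01", "severity": "high"},
    {"rule": "failed_login", "source": "vpn", "severity": "low"},
    {"rule": "failed_login", "source": "vpn", "severity": "medium"},
    {"rule": "sql_injection", "source": "db01", "severity": "critical"},
    {"rule": "port_scan", "source": "fw", "severity": "medium"},
]
for group in summarize_alerts(alerts):
    print(group)
```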

2. Red-Team Simulation and Threat Emulation

Generative AI can act as a red-team companion — constantly generating new variations of known exploits and testing corporate defenses.

When used ethically, this accelerates blue-team readiness and validates database firewall performance in complex, multi-vector scenarios.

This feedback loop — attack simulation, detection, refinement — creates a living security system that learns from both real and synthetic adversaries.
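The simulate, detect, refine loop can be sketched in miniature by mutating known-bad inputs and recording which variants a filter misses. In the sketch below the hand-written mutations stand in for variations a generative model might propose, and the denylist, `mutate`, and `find_bypasses` are hypothetical names used only for this illustration.

```python
import re

# A small denylist filter under test (an assumption, similar in spirit to the sanitizer above)
BLOCKED = [r"drop\s+table", r"ignore\s+previous"]

def is_blocked(text: str) -> bool:
    return any(re.search(p, text, flags=re.IGNORECASE) for p in BLOCKED)

def mutate(seed: str):
    """Yield simple, deterministic variations of a known-bad input."""
    yield seed
    yield seed.upper()                 # case change
    yield seed.replace(" ", "  ")      # extra whitespace
    yield seed.replace(" ", "/**/")    # comment used as a separator
    yield seed.replace("o", "0")       # character substitution

def find_bypasses(seeds: list) -> list:
    """Return every variant the filter fails to block."""
    return [variant for seed in seeds for variant in mutate(seed)
            if not is_blocked(variant)]

for miss in find_bypasses(["drop table users", "ignore previous instructions"]):
    print("not blocked:", miss)
```

Each reported miss is a concrete test case for the blue team: tighten the filter or add a complementary control, then rerun the same seeds to confirm the gap is closed.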

3. Zero-Trust and Context Isolation

Adopting a Zero-Trust framework ensures no system component is inherently trusted.

Every connection, dataset, and API call is verified each time it is used, rather than trusted after a single check.

Using role-based access control and data masking at every layer ensures sensitive data remains shielded even in collaborative or federated AI environments.

The combination of context-aware authorization and least-privilege isolation helps contain potential AI-generated exploits before they spread.
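Role-based access and masking can be combined in a single policy lookup that denies by default. The following is a minimal sketch, assuming a hypothetical in-memory `ROLE_POLICY` table and made-up role and field names; it does not represent any particular product's API.

```python
# Hypothetical policy: which fields each role may see, and which of those are masked
ROLE_POLICY = {
    "analyst": {"visible": {"user_id", "event", "email"}, "masked": {"email"}},
    "auditor": {"visible": {"user_id", "event", "email", "ssn"}, "masked": {"ssn"}},
}

def mask_value(value: str) -> str:
    """Keep the last two characters and mask the rest."""
    return "*" * max(len(value) - 2, 0) + value[-2:]

def apply_policy(role: str, row: dict) -> dict:
    policy = ROLE_POLICY.get(role)
    if policy is None:
        # Deny by default: unknown roles get nothing
        raise PermissionError(f"unknown role: {role}")
    result = {}
    for field, value in row.items():
        if field not in policy["visible"]:
            continue  # least privilege: fields not explicitly allowed are dropped
        result[field] = mask_value(str(value)) if field in policy["masked"] else value
    return result

row = {"user_id": 7, "event": "login", "email": "a@b.io", "ssn": "123-45-6789"}
print(apply_policy("analyst", row))
# {'user_id': 7, 'event': 'login', 'email': '****io'}
```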

4. Ethical and Regulatory Oversight

Generative models make decisions that may impact privacy, compliance, and even reputation.

That’s why oversight isn’t optional — it’s a safeguard. Establish internal ethics committees or AI governance boards responsible for reviewing datasets, evaluating bias, and ensuring regulatory transparency.

Documenting these controls provides assurance during audits and protects against emerging AI liability frameworks.

The Compliance Imperative

As regulators tighten control over AI ecosystems, accountability becomes measurable.

Organizations must demonstrate not only technical security, but also governance maturity — how they monitor, log, and report on AI behavior.

| Regulation | AI Security Requirement | Solution Approach |
|---|---|---|
| GDPR | Protect PII used in model training and inference | Automatic discovery, classification, and masking of sensitive data |
| HIPAA | Safeguard PHI in healthcare analytics and AI models | Dynamic masking, encryption, and access control enforcement |
| PCI DSS 4.0 | Ensure encryption of payment data in AI-based fraud detection | End-to-end encryption and tokenization via secure proxies |
| NIST AI RMF | Document AI model risk and maintain provenance logs | Immutable audit trails, versioning, and continuous risk scoring |

Compliance isn’t just a legal requirement — it’s a competitive differentiator.

Enterprises demonstrating transparent AI governance gain user trust and reduce friction with auditors, customers, and partners.

Conclusion: Implementing Defense-in-Depth at Machine Speed

The next generation of cybersecurity operates on the same terrain as its adversaries — data, algorithms, and automation.

Generative AI reshapes this battlefield, shifting defense from static barriers to dynamic, self-learning systems.

Securing AI-enabled infrastructures requires a layered, adaptive approach:

- Prevention through input sanitization, contextual filters, and integrity validation

- Detection via adaptive anomaly modeling and real-time behavioral baselines

- Response through automated remediation and immutable audit logging

- Governance spanning compliance frameworks and ethical AI oversight

When combined, these layers create a continuously improving defense fabric — one capable of thinking like an attacker while acting like a guardian.

The future of cybersecurity will not simply be faster; it will be predictive, autonomous, and transparent.

By adopting generative defense principles, enterprises can transform AI systems from high-risk assets into engines of trust, compliance, and resilience.
