NLP, LLM & ML Data Compliance Tools for Vertica

NLP, LLM & ML Data Compliance Tools for Vertica are becoming essential as enterprises accelerate their adoption of generative AI, retrieval-augmented generation (RAG), feature engineering, and predictive analytics. Vertica frequently serves as a high-performance analytical backend for machine learning pipelines, large-scale data preparation, and AI-driven applications. However, these same workflows increase the risk of unintentionally exposing regulated or confidential information to models, prompts, and downstream consumers. As a result, organizations must adopt automated compliance tooling capable of monitoring, masking, and controlling AI-assisted access to Vertica data.

Modern AI systems introduce new exposure patterns. Large language models, autonomous agents, and machine learning workloads often generate unpredictable SQL, extract overly broad datasets, or process sensitive fields as training material. When unprotected, an LLM or ML engine may surface private information inside responses, embeddings, or derived model artifacts—leading to potential compliance violations under GDPR, HIPAA, PCI DSS, or NIST 800-53. Because Vertica does not natively include LLM-aware access controls, dynamic masking, contextual enforcement, or cross-pipeline auditing, organizations must integrate a specialized compliance layer that operates proactively before data reaches the model or pipeline layer.

DataSunrise delivers these capabilities. The platform acts as a centralized compliance gateway for Vertica by providing sensitive-data discovery, dynamic masking, SQL enforcement, and automated auditing. Together, these features form the foundation of NLP, LLM & ML Data Compliance Tools for Vertica.

Why Vertica Requires LLM-Aware Compliance Automation

AI-driven workloads introduce compliance challenges that traditional governance systems fail to address. For instance, LLM-generated SQL may unintentionally request excessive amounts of sensitive data. Additionally, ETL pipelines may extract training corpora from Vertica without validating whether the underlying fields contain PII or PHI. Meanwhile, RAG architectures often vectorize text columns—including those containing personal identifiers—into embeddings, making lineage management extremely difficult.

Furthermore, Vertica’s architecture compounds these risks. Features such as projections, ROS containers (and, in older releases, WOS), and wide analytical schemas may distribute sensitive values across multiple physical structures. Because Vertica serves as a high-performance analytical platform for a variety of workloads, ranging from BI dashboards to ML frameworks like VerticaPy, any compliance gap may quickly propagate across multiple teams and systems.

To avoid compliance failures, organizations require NLP, LLM & ML Data Compliance Tools for Vertica that automate:

- discovery of sensitive Vertica columns before ML training or RAG ingestion,

- dynamic masking of high-risk attributes for NLP and LLM workloads,

- SQL-context enforcement to prevent unsafe or excessive queries generated by AI,

- automated auditing of all AI-generated access to Vertica,

- monitoring of model inputs and outputs to reduce the risk that LLM responses, including hallucinated ones, expose private values.

Without these automated controls, AI pipelines can unintentionally ingest unmasked data or reveal sensitive fields during inference.

NLP, LLM & ML Data Compliance Architecture for Vertica

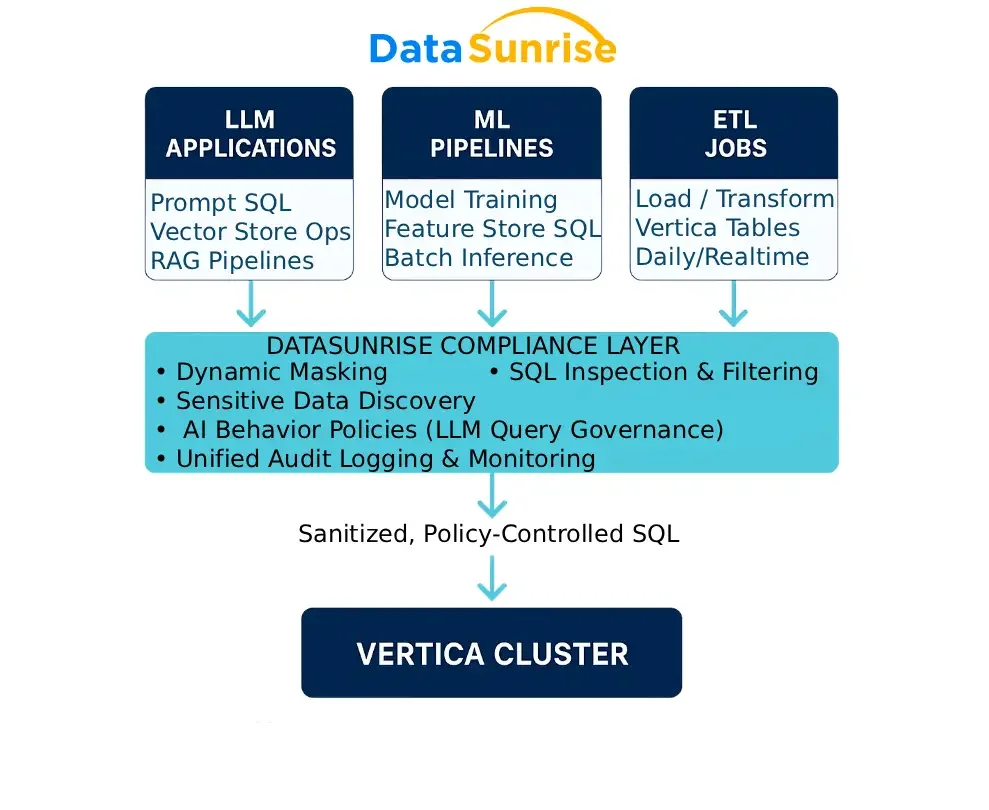

The diagram above illustrates how NLP, LLM & ML Data Compliance Tools for Vertica function as a security and transformation layer between Vertica and AI workloads. Every LLM, ML, NLP, and ETL request flows through this enforcement layer, ensuring consistent masking, auditing, and SQL inspection.

This architecture supports:

- LLM assistants generating SQL dynamically,

- RAG pipelines querying Vertica tables for retrieval,

- feature engineering processes reading sensitive columns,

- batch ML training pulling datasets directly from Vertica.

Because all enforcement occurs before Vertica data reaches AI systems, organizations retain full visibility, consistency, and governance across every NLP, LLM, and ML workflow.

Sensitive Data Discovery in Vertica AI Pipelines

Effective automation begins with discovery. NLP, LLM & ML Data Compliance Tools for Vertica must identify all sensitive fields that could affect training data, vector embeddings, prompts, or inference outputs. DataSunrise’s Sensitive Data Discovery scans Vertica tables and automatically identifies PII, PHI, financial values, authentication tokens, and free-text columns containing regulated content.

This proactive discovery mechanism prevents training datasets from being contaminated with sensitive information. Additionally, discovery results integrate directly with masking and SQL-enforcement modules, ensuring that newly detected fields automatically inherit the required compliance protections.
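
To make the discovery step concrete, here is a minimal Python sketch of pattern-based column classification over sampled values. It is not DataSunrise's implementation: the detector patterns, the `classify_columns` function, the `min_hit_ratio` threshold, and the sample data are all assumptions made for illustration, and a production scanner would read samples from Vertica and use far richer rules.

```python
import re

# Hypothetical detectors for illustration; real discovery tools use richer
# dictionaries, checksums, and context rules.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def classify_columns(samples, min_hit_ratio=0.5):
    """Flag columns whose sampled values match a sensitive-data pattern.

    `samples` maps a column name to a list of sampled string values.
    Returns {column: [labels]} for columns where at least `min_hit_ratio`
    of the non-empty samples match a detector.
    """
    findings = {}
    for column, values in samples.items():
        non_empty = [v for v in values if v]
        if not non_empty:
            continue
        for label, pattern in PATTERNS.items():
            hits = sum(1 for v in non_empty if pattern.search(v))
            if hits / len(non_empty) >= min_hit_ratio:
                findings.setdefault(column, []).append(label)
    return findings


# Sampled values as they might come back from a few rows of a table.
sample_rows = {
    "contact": ["ann@example.com", "bob@example.org", ""],
    "tax_id": ["123-45-6789", "987-65-4321"],
    "notes": ["called about invoice", "email ann@example.com for details"],
    "region": ["EMEA", "APAC"],
}

findings = classify_columns(sample_rows)
print(findings)
```

Note that the free-text `notes` column is flagged alongside the structured ones, which is the case that matters most for RAG ingestion. The resulting column-to-label map is the kind of output that masking and SQL-enforcement policies could then consume.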

Dynamic Masking for NLP, LLM & ML Data Compliance Tools for Vertica

Dynamic masking is one of the core NLP, LLM & ML Data Compliance Tools for Vertica. When AI systems generate SQL, they rarely specify which columns must remain protected. Because of this unpredictability, masking must occur automatically—based on policy—not based on application logic.

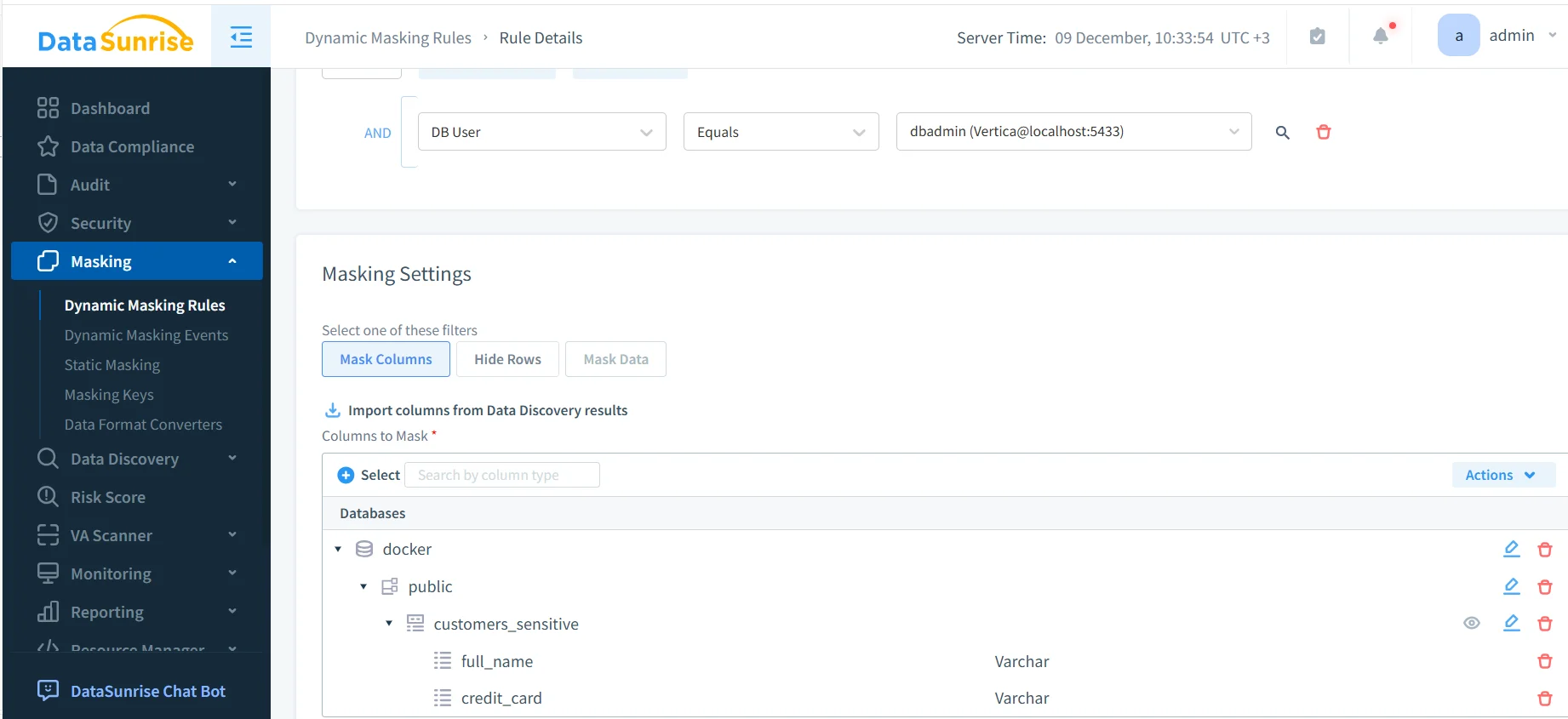

The screenshot above shows how administrators configure dynamic masking for Vertica fields frequently used by ML and NLP pipelines.

This automated masking protects sensitive attributes during:

- prompt generation for LLM applications,

- RAG-based retrieval workflows that feed vector stores,

- ETL extractions for ML feature stores,

- model training dataset construction,

- data scientist exploration inside notebooks.

Furthermore, masking prevents AI models from leaking original values inside responses, embeddings, or training artifacts—aligning with GDPR pseudonymization rules and PCI DSS requirements.
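
The idea of masking by policy rather than by application logic can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the product's masking engine: the column names, the `MASKING_POLICY` mapping, the helper functions, and the fixed demo salt are all hypothetical.

```python
import hashlib


def mask_email(value):
    """Keep the first character and the domain, hide the rest."""
    local, _, domain = value.partition("@")
    return local[:1] + "***@" + domain if domain else "***"


def mask_keep_last4(value):
    """Replace all but the last four characters with asterisks."""
    return "*" * max(len(value) - 4, 0) + value[-4:]


def pseudonymize(value, salt="demo-salt"):
    """Deterministic salted hash so joins still work on the masked value.

    The fixed salt is an assumption for this demo; a real deployment would
    manage the secret outside the code.
    """
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:12]


# Hypothetical policy: column name -> masking function.
MASKING_POLICY = {
    "email": mask_email,
    "card_number": mask_keep_last4,
    "patient_id": pseudonymize,
}


def apply_masking(rows, policy=MASKING_POLICY):
    """Mask every policy-covered column, whatever query produced the rows."""
    masked_rows = []
    for row in rows:
        masked = {}
        for column, value in row.items():
            if column in policy and value is not None:
                masked[column] = policy[column](value)
            else:
                masked[column] = value
        masked_rows.append(masked)
    return masked_rows


rows = [
    {
        "email": "ann@example.com",
        "card_number": "4111111111111111",
        "patient_id": "P-1001",
        "region": "EMEA",
    }
]
masked_rows = apply_masking(rows)
print(masked_rows[0])
```

Because the policy is keyed on column names rather than on the query text, the same protection applies whether the rows were requested by a notebook, an ETL job, or an LLM-generated statement.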

SQL Enforcement for NLP, LLM & ML Data Compliance Tools for Vertica

AI-generated SQL can introduce significant risk. LLMs frequently produce queries that include unconstrained JOINs, SELECT * scans, or schema-wide extractions. Additionally, AI agents may accidentally generate modification statements such as DROP TABLE or ALTER TABLE. To address these challenges, NLP, LLM & ML Data Compliance Tools for Vertica enforce contextual SQL rules before the query reaches Vertica.

This enforcement prevents:

- prompt-injection attacks attempting to extract sensitive or restricted tables,

- high-volume scans that expose entire datasets to an LLM,

- schema alterations triggered by autonomous agents,

- excessive data retrieval during ML feature engineering.

With enforcement automation in place, organizations gain confidence that LLM-generated SQL cannot exceed policy boundaries.
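
As a rough illustration of pre-execution checks, the following Python sketch rejects the query shapes described above. It is a simplified stand-in, not how DataSunrise evaluates rules: the `check_sql` function, the blocked-statement list, the restricted table name, and the row cap are assumptions, and the regex matching used here is far weaker than the real SQL parsing a production gateway would need.

```python
import re

# Hypothetical policy values for illustration.
BLOCKED_STATEMENTS = ("DROP", "ALTER", "TRUNCATE", "DELETE", "UPDATE", "INSERT")
RESTRICTED_TABLES = ("patients_raw",)
MAX_LIMIT = 1000


def check_sql(sql):
    """Return a list of policy violations; an empty list means 'allow'."""
    violations = []
    upper = sql.strip().rstrip(";").upper()
    first = upper.split(None, 1)[0] if upper else ""

    # Block schema changes and data modification from AI agents.
    if first in BLOCKED_STATEMENTS:
        violations.append(f"statement type {first} is not allowed")

    # Force explicit column lists so masking and review stay predictable.
    if re.search(r"\bSELECT\s+\*", upper):
        violations.append("SELECT * is not allowed; list columns explicitly")

    # Deny any reference to restricted tables.
    for table in RESTRICTED_TABLES:
        if re.search(rf"\b{re.escape(table.upper())}\b", upper):
            violations.append(f"table {table} is restricted")

    # Cap result size to avoid exposing whole datasets to a model.
    if first == "SELECT":
        match = re.search(r"\bLIMIT\s+(\d+)\b", upper)
        if match is None:
            violations.append("SELECT must include a LIMIT")
        elif int(match.group(1)) > MAX_LIMIT:
            violations.append(f"LIMIT exceeds the maximum of {MAX_LIMIT}")

    return violations


for statement in (
    "DROP TABLE customers",
    "SELECT * FROM patients_raw",
    "SELECT region, COUNT(*) FROM orders GROUP BY region LIMIT 100",
):
    print(statement, "->", check_sql(statement) or "allowed")
```

Returning the full list of violations, rather than stopping at the first one, gives the audit trail a complete reason for each blocked query.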

Automated Auditing for NLP, LLM & ML Data Compliance Tools for Vertica

Comprehensive auditing is essential for responsible AI governance. NLP, LLM & ML Data Compliance Tools for Vertica must provide complete insight into how AI agents, pipelines, and applications interact with sensitive Vertica data. Manual logging is insufficient because AI workloads generate thousands of queries autonomously.

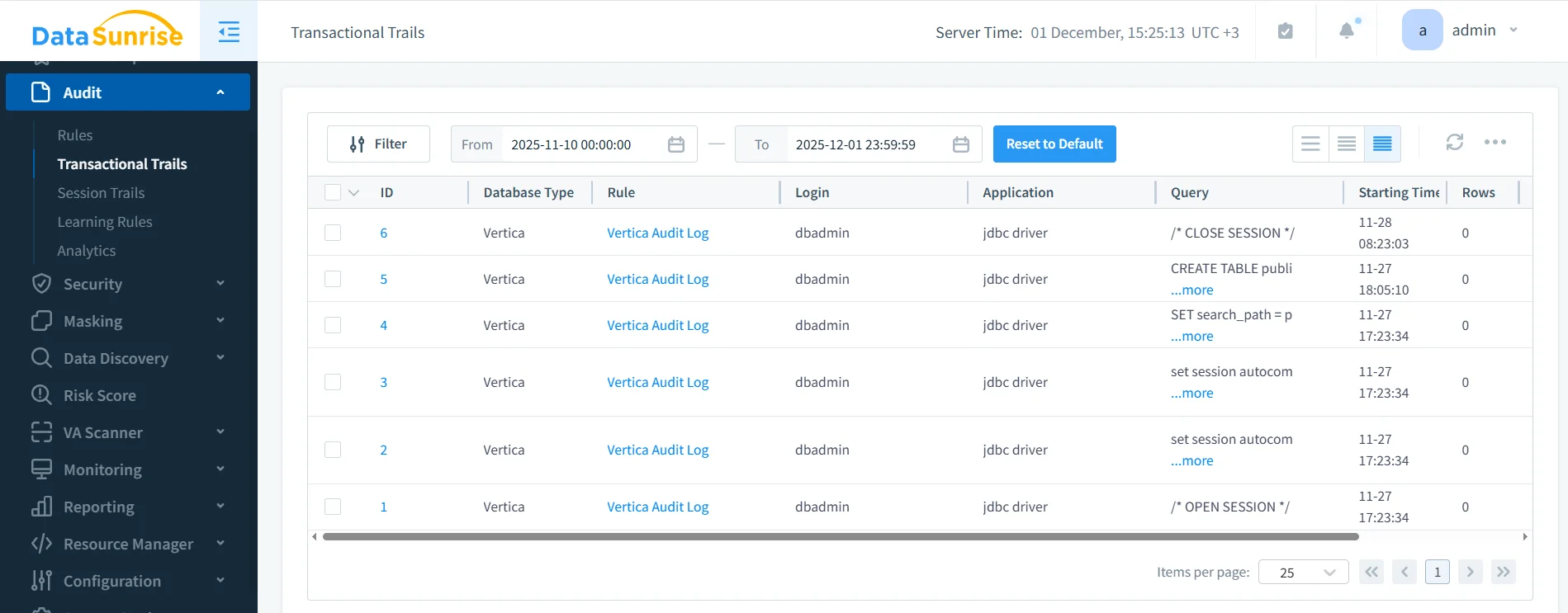

DataSunrise automatically captures SQL activity, session transitions, masking results, and rule-trigger actions. The screenshot above shows a unified audit trail suitable for both operational reviews and regulatory verification.

These logs enable compliance teams to:

- trace how an LLM-constructed dataset was generated,

- validate that sensitive fields were masked during ingestion,

- investigate anomalous or high-risk model behavior,

- produce explainability evidence for regulated AI deployments.

Because audit data is centralized, organizations maintain consistent oversight across all LLM, NLP, ETL, and ML interactions.
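
To show what a single entry in such an audit trail might contain, here is a small Python sketch that builds a structured record for one AI-issued query. The field names and the `build_audit_record` function are assumptions for illustration and do not reflect the actual DataSunrise log schema.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_audit_record(actor, sql, masked_columns, rule_actions, row_count):
    """Build one structured audit entry for a query issued by an AI workload.

    Field names are hypothetical; they mirror the items named in the text:
    SQL activity, masking results, and rule-trigger actions.
    """
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "sql": sql,
        # A hash of the statement makes repeated queries easy to correlate.
        "sql_sha256": hashlib.sha256(sql.encode("utf-8")).hexdigest(),
        "masked_columns": sorted(masked_columns),
        "rule_actions": list(rule_actions),
        "row_count": row_count,
    }


record = build_audit_record(
    actor="rag-ingest-service",
    sql="SELECT email, card_number FROM customers LIMIT 100",
    masked_columns={"email", "card_number"},
    rule_actions=["mask:email", "mask:card_number"],
    row_count=100,
)

# One JSON object per line is easy to ship to a central log store.
audit_line = json.dumps(record, sort_keys=True)
print(audit_line)
```

Recording which columns were masked alongside the statement itself is what lets a reviewer later confirm that sensitive fields were protected during ingestion.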

Comparison: Vertica vs. NLP, LLM & ML Data Compliance Tools for Vertica

| AI Compliance Requirement | Vertica Native Capability | NLP, LLM & ML Data Compliance Tools for Vertica |
|---|---|---|
| PII/PHI detection before training | Manual review | Automated Sensitive Data Discovery |
| Dynamic masking for AI queries | Not available | Real-time masking |
| LLM SQL enforcement | RBAC only | Rule-based SQL filtering |
| Centralized audit logs | Distributed logs | Unified audit trail |
| Training data lineage | Manual tracking | Automated AI-aware correlation |

Conclusion

NLP, LLM & ML Data Compliance Tools for Vertica give organizations the ability to deploy AI technologies securely and responsibly. Dynamic masking blocks the exposure of sensitive values. SQL enforcement prevents unsafe or unintended queries generated by autonomous systems. Automated auditing provides complete visibility and evidence for regulatory reviews. Together, these controls form an end-to-end compliance automation framework that protects Vertica data across all NLP, LLM, and ML workloads.

