AI Cyber Attacks

As artificial intelligence revolutionizes enterprise operations, 91% of organizations are deploying AI systems across mission-critical workflows. While these technologies deliver transformative capabilities, they face unprecedented cyber attack threats that traditional security frameworks cannot adequately address.

This guide examines emerging AI cyber attack vectors, exploring comprehensive defense strategies that enable organizations to protect their AI investments while maintaining operational excellence and data security.

DataSunrise's cutting-edge AI Security platform delivers Zero-Touch Threat Protection with Autonomous Attack Detection across all major AI platforms. Our Context-Aware Protection seamlessly integrates cyber defense with technical controls, providing Surgical Precision attack mitigation for comprehensive AI system protection.

Critical AI Cyber Attack Vectors

AI systems face unique security threats that require specialized protection approaches:

Prompt Injection Attacks

Adversaries craft malicious inputs designed to override system instructions and manipulate AI behavior, potentially causing unauthorized access to system functions, exposure of sensitive information, or privilege escalation. Advanced techniques include indirect injection through third-party content and multi-stage attacks that evade detection. The underlying weakness resembles SQL injection vulnerabilities in traditional systems: untrusted input ends up being interpreted as instructions rather than data.

Training Data Poisoning

Attackers compromise training datasets to influence model behavior by inserting biased content, creating backdoor triggers, or introducing security vulnerabilities. These attacks compromise AI systems at their foundation. Remediation typically requires extensive retraining, and prevention depends on protecting training data repositories with measures such as comprehensive database encryption.
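
One way to make tampering with a training data repository detectable is to record a cryptographic hash of every file when a dataset is approved, then re-check those hashes before each training run. The sketch below assumes a file-based dataset; the function names `build_manifest` and `find_tampered_files` are hypothetical and not part of any product API. It only detects changes made after approval, not poisoned records that were already present when the manifest was created.

```python
import hashlib
from pathlib import Path


def file_sha256(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(dataset_dir: Path) -> dict[str, str]:
    """Map each file's relative path to its hash (hypothetical helper)."""
    return {
        p.relative_to(dataset_dir).as_posix(): file_sha256(p)
        for p in sorted(dataset_dir.rglob("*"))
        if p.is_file()
    }


def find_tampered_files(dataset_dir: Path, manifest: dict[str, str]) -> dict[str, list[str]]:
    """Compare the dataset on disk against a previously stored manifest."""
    current = build_manifest(dataset_dir)
    return {
        "modified": sorted(k for k in manifest if k in current and current[k] != manifest[k]),
        "missing": sorted(k for k in manifest if k not in current),
        "unexpected": sorted(k for k in current if k not in manifest),
    }
```

In practice the manifest itself would need to be stored somewhere the attacker cannot modify, for example in a separately access-controlled location.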

Model Extraction and IP Theft

Sophisticated adversaries attempt to reconstruct proprietary models through systematic API probing and query analysis. Model extraction attacks threaten competitive advantages and intellectual property investments, requiring comprehensive audit trails and behavioral analytics to detect unusual query patterns.
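
As a rough illustration of the behavioral analytics mentioned above, the following sketch counts each client's queries inside a sliding time window and flags clients that exceed a threshold. The class name `ExtractionMonitor`, the window length, and the query limit are all assumptions chosen for illustration. Query volume alone is a coarse signal, so a real deployment would likely combine it with other features from the audit trail.

```python
from collections import defaultdict, deque


class ExtractionMonitor:
    """Flag clients whose query volume in a sliding time window is unusually high."""

    def __init__(self, window_seconds: float = 60.0, max_queries: int = 100):
        self.window_seconds = window_seconds   # placeholder value
        self.max_queries = max_queries         # placeholder value
        self._history = defaultdict(deque)     # client_id -> recent query timestamps

    def record_query(self, client_id: str, timestamp: float) -> bool:
        """Record one query; return True if the client now exceeds the threshold."""
        window = self._history[client_id]
        window.append(timestamp)
        # Drop timestamps that have fallen out of the window
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_queries
```

Timestamps are passed in explicitly so the same logic can be replayed over stored audit records as well as applied to live traffic.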

Adversarial Examples

Attackers craft inputs specifically designed to cause AI systems to misclassify data or make incorrect decisions. Adversarial examples exploit mathematical properties of neural networks to create small, often imperceptible perturbations that dramatically alter model outputs. Organizations must implement robust security rules to detect and block these sophisticated attack patterns.
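
To make the mechanism concrete, here is a toy version of the fast gradient sign method applied to a three-feature logistic regression model instead of a neural network. The weights, input, and epsilon are invented for illustration. The point is only that a bounded change to each feature, chosen using the gradient of the loss, can flip the predicted class.

```python
import math


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - y) * w.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - true_label) * w for w in weights]
    sign = [(g > 0) - (g < 0) for g in grad]   # -1, 0, or +1 per feature
    return [xi + epsilon * s for xi, s in zip(x, sign)]


# Toy model and input; all values are assumptions chosen for illustration
weights, bias = [2.0, -3.0, 1.0], 0.0
x = [0.5, 0.2, 0.1]
x_adv = fgsm_perturb(weights, bias, x, true_label=1, epsilon=0.1)

original_class = int(predict_proba(weights, bias, x) >= 0.5)
adversarial_class = int(predict_proba(weights, bias, x_adv) >= 0.5)
print(original_class, adversarial_class)
```

No feature moves by more than epsilon, yet the predicted class changes. This is why defenses tend to look at model behavior under small input changes instead of relying on inputs looking unusual.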

AI Attack Detection Implementation

Detecting prompt injection attacks requires real-time analysis of user inputs combined with pattern matching and behavioral monitoring. Organizations should leverage data discovery capabilities to identify sensitive information within AI interactions. The following implementation demonstrates the pattern-matching part of this approach, flagging prompts that contain known injection phrases:

```python
import re


class AIAttackDetector:
    def __init__(self):
        # Known phrasings used to override system instructions
        self.injection_patterns = [
            r'ignore\s+previous\s+instructions',
            r'disregard\s+all\s+prior\s+commands',
            r'act\s+as\s+if\s+you\s+are\s+administrator'
        ]

    def detect_prompt_injection(self, prompt: str, user_id: str) -> dict:
        """Detect prompt injection attempts in real-time"""
        detection_result = {
            'user_id': user_id,
            'threat_level': 'LOW',
            'attack_detected': False
        }

        # Check for known injection patterns
        for pattern in self.injection_patterns:
            if re.search(pattern, prompt, re.IGNORECASE):
                detection_result['attack_detected'] = True
                detection_result['threat_level'] = 'HIGH'
                break

        return detection_result
```

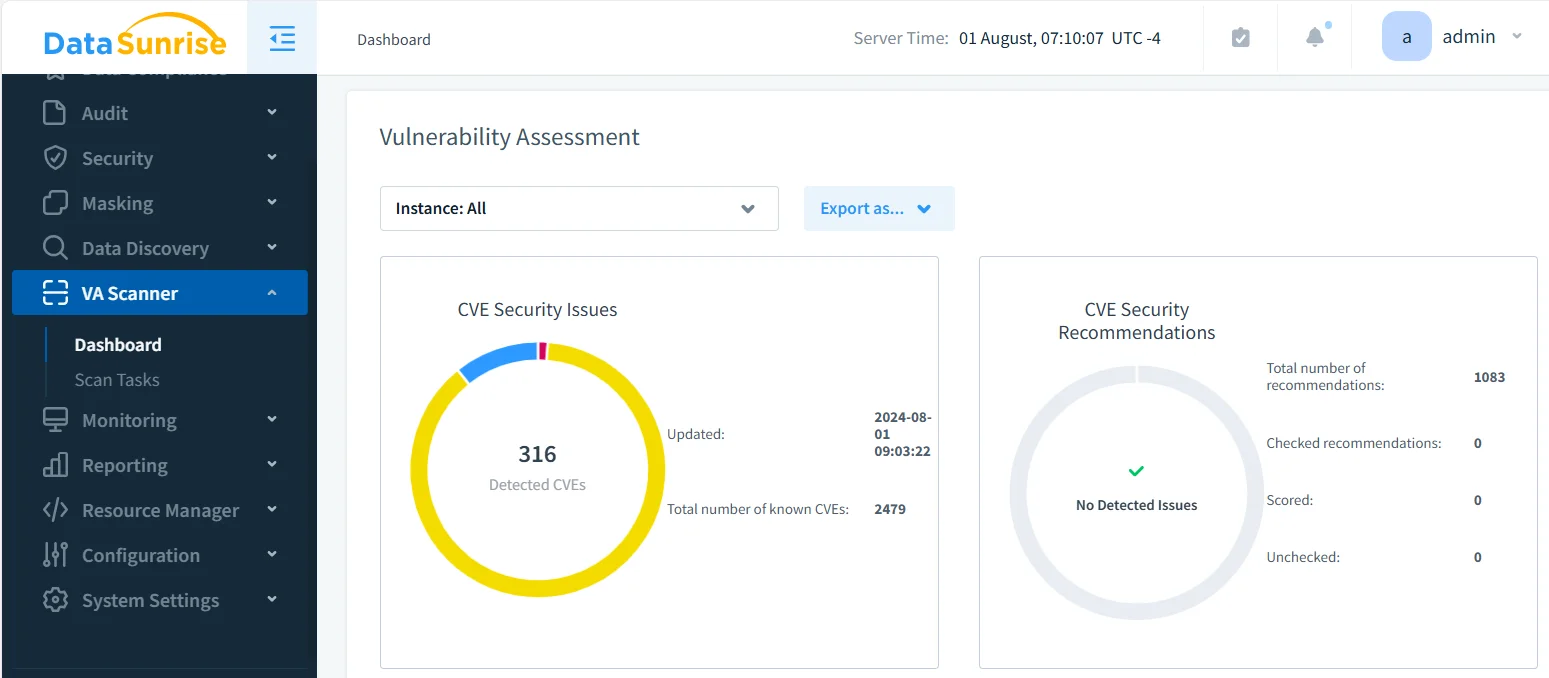
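
Literal pattern lists are easy to evade with zero-width characters, full-width letters, or irregular spacing. One possible mitigation is to normalize the prompt before matching. The sketch below is an assumption about how that could be done, not a description of any product's behavior; the helper names are hypothetical and the normalization steps are far from exhaustive.

```python
import re
import unicodedata

# Zero-width and formatting characters sometimes inserted to split keywords
_INVISIBLE = dict.fromkeys(map(ord, "\u200b\u200c\u200d\u2060\ufeff"))

INJECTION_PATTERNS = [
    re.compile(r"ignore\s+previous\s+instructions"),
    re.compile(r"disregard\s+all\s+prior\s+commands"),
]


def normalize_prompt(prompt: str) -> str:
    """Reduce common obfuscations before pattern matching."""
    text = unicodedata.normalize("NFKC", prompt)   # fold full-width and compatibility forms
    text = text.translate(_INVISIBLE)              # strip zero-width characters
    text = text.casefold()                         # case-insensitive comparison
    return re.sub(r"\s+", " ", text).strip()       # collapse whitespace runs


def matches_injection_pattern(prompt: str) -> bool:
    """Return True if the normalized prompt contains a known injection phrase."""
    normalized = normalize_prompt(prompt)
    return any(p.search(normalized) for p in INJECTION_PATTERNS)
```

Normalization narrows the gap but does not close it. Paraphrased or translated instructions will still pass a phrase list, which is why pattern matching is usually paired with behavioral monitoring.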

Model Integrity Monitoring

Monitoring AI model integrity requires continuous comparison of current performance metrics against established baselines to detect training data poisoning. Regular vulnerability assessment helps identify weaknesses before attackers exploit them. This implementation tracks performance deviations that may indicate compromised models:

```python
class AIModelIntegrityMonitor:
    def __init__(self, baseline_metrics):
        self.baseline_metrics = baseline_metrics
        self.alert_threshold = 0.15  # flag relative deviations above 15%

    def detect_model_poisoning(self, current_performance: dict) -> dict:
        """Monitor for signs of training data poisoning"""
        integrity_assessment = {
            'poisoning_detected': False,
            'anomalies': []
        }

        # Compare performance against baseline
        for metric, baseline_value in self.baseline_metrics.items():
            current_value = current_performance.get(metric, 0)
            # Relative deviation; abs() handles negative baselines and the
            # 0.001 floor avoids division by zero
            deviation = abs(current_value - baseline_value) / max(abs(baseline_value), 0.001)

            if deviation > self.alert_threshold:
                integrity_assessment['anomalies'].append({
                    'metric': metric,
                    'deviation': deviation
                })
                integrity_assessment['poisoning_detected'] = True

        return integrity_assessment
```
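
A fixed relative threshold does not account for how much a metric normally varies from one evaluation run to the next. As an alternative sketch, a new measurement can be compared against the mean and standard deviation of recent history. The function name `is_metric_anomalous` and the default threshold of 3.0 are assumptions for illustration.

```python
import math
import statistics


def is_metric_anomalous(history: list[float], current: float, z_threshold: float = 3.0) -> bool:
    """Return True if `current` is more than z_threshold standard deviations from the historical mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # History is constant, so any real change counts as a deviation
        return not math.isclose(current, mean)
    return abs(current - mean) / stdev > z_threshold
```

As with the baseline comparison, a flagged deviation is a prompt for investigation, not proof of poisoning, since data drift or infrastructure changes can produce the same signal.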

Defense Implementation Strategy

For Organizations:

- Multi-Layered Security: Implement defense-in-depth strategies with role-based access control

- Continuous Monitoring: Deploy real-time monitoring for attack detection

- Incident Response: Establish AI-specific response procedures with automated threat mitigation

- Compliance Management: Utilize compliance manager tools to maintain regulatory adherence
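
Role-based access control, named in the first item above, can be sketched as a deny-by-default lookup from roles to permitted AI operations. The role names and permissions below are hypothetical examples, not a recommended policy.

```python
from enum import Enum


class Permission(Enum):
    QUERY_MODEL = "query_model"
    VIEW_AUDIT_LOG = "view_audit_log"
    UPDATE_TRAINING_DATA = "update_training_data"
    DEPLOY_MODEL = "deploy_model"


# Hypothetical role definitions for illustration only
ROLE_PERMISSIONS = {
    "analyst": {Permission.QUERY_MODEL},
    "auditor": {Permission.QUERY_MODEL, Permission.VIEW_AUDIT_LOG},
    "ml_engineer": {
        Permission.QUERY_MODEL,
        Permission.UPDATE_TRAINING_DATA,
        Permission.DEPLOY_MODEL,
    },
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles and unlisted permissions are rejected."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Keeping write access to training data separate from query access limits who is in a position to attempt data poisoning in the first place.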

For Technical Teams:

- Input Sanitization: Implement robust validation with dynamic data masking and static masking for sensitive data

- Rate Limiting: Prevent model extraction through intelligent rate limiting

- Audit Logging: Maintain comprehensive audit trails with audit storage optimization
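
The rate limiting item above could be implemented in many ways. A token bucket is one common choice because it tolerates short bursts while capping the sustained query rate. The sketch below is a minimal single-client version; the class name and parameters are assumptions, and a real service would typically keep one bucket per API key or user.

```python
import time


class TokenBucket:
    """Allow short bursts while capping the sustained request rate."""

    def __init__(self, rate_per_second: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_second
        self.capacity = capacity
        self._clock = clock                  # injectable for testing
        self._tokens = float(capacity)       # start with a full bucket
        self._last_refill = clock()

    def allow(self) -> bool:
        """Consume one token if available; return False when the bucket is empty."""
        now = self._clock()
        elapsed = now - self._last_refill
        self._last_refill = now
        # Refill in proportion to elapsed time, never above capacity
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False
```

Rejected requests are worth writing to the audit trail as well, since a client that repeatedly hits the limit is a candidate for the extraction monitoring described earlier.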

DataSunrise: Comprehensive AI Cyber Attack Defense

DataSunrise provides enterprise-grade cyber attack protection designed specifically for AI environments. Our solution delivers Autonomous Threat Detection with Real-Time Attack Mitigation across ChatGPT, Amazon Bedrock, Azure OpenAI, Qdrant, and custom AI deployments, ensuring AI compliance by default.

Key Defense Capabilities:

- ML-Powered Threat Detection: Context-Aware Protection with Suspicious Behavior Detection across all AI interactions using advanced LLM and ML tools

- Prompt Injection Prevention: Zero-Touch AI Monitoring with automated attack pattern recognition

- Model Integrity Protection: Continuous monitoring for training data poisoning attempts

- Cross-Platform Coverage: Unified security across 50+ supported platforms with Vendor-Agnostic Protection

- Automated Response: Real-Time Regulatory Alignment with immediate threat notification

DataSunrise's Flexible Deployment Modes support on-premise, cloud, and hybrid AI environments with seamless integration. Organizations achieve a significant reduction in successful AI cyber attacks, enhanced threat visibility through comprehensive audit logs, and streamlined security management with No-Code Policy Automation. Our database firewall provides an additional layer of protection against unauthorized access.

Unlike solutions requiring constant rule tuning, DataSunrise delivers Autonomous Compliance Orchestration with Continuous Regulatory Calibration. Our Cost-Effective, Scalable platform serves organizations from startups to Fortune 500 enterprises with Flexible Pricing Policy.

Conclusion: Building Resilient AI Security

AI cyber attacks represent sophisticated threats requiring comprehensive defense strategies addressing unique vulnerabilities in AI systems. Organizations implementing robust AI security frameworks position themselves to leverage AI's transformative potential while maintaining protection against evolving threats.

By implementing advanced defense frameworks with automated monitoring and intelligent threat detection, organizations can confidently deploy AI innovations while protecting their most valuable assets. The future belongs to organizations that master both AI innovation and comprehensive cyber security with continuous data protection.

Protect Your Data with DataSunrise

Secure your data across every layer with DataSunrise. Detect threats in real time with Activity Monitoring, Data Masking, and Database Firewall. Enforce Data Compliance, discover sensitive data, and protect workloads across 50+ supported cloud, on-prem, and AI system data source integrations.
