How to Mask Sensitive Data in Vertica

How to mask sensitive data in Vertica is a critical question for organizations that rely on Vertica as a high-performance analytics platform while handling regulated or confidential information. Vertica is widely used for BI reporting, customer analytics, machine learning pipelines, and large-scale data processing. These use cases often require broad data access, which increases the risk that personally identifiable information (PII), payment data, or contact details will be exposed through queries, exports, or downstream systems.

In analytics-driven environments, traditional data protection techniques quickly become insufficient. For example, static permissions, copied tables, or manually created views struggle to keep up with changing schemas, evolving projections, and growing numbers of users. Therefore, organizations need a masking approach that operates dynamically and consistently across all Vertica workloads, without slowing down queries or forcing application changes.

Masking sensitive data in Vertica effectively requires applying protection at query time. Instead of modifying stored data, dynamic data masking intercepts query results and replaces sensitive fields with anonymized or partially hidden values based on policy. This approach preserves analytical usefulness while preventing unauthorized disclosure.

Why Masking Sensitive Data in Vertica Is Challenging

Vertica’s architecture prioritizes speed and scalability. It stores data in columnar ROS containers and uses projections to create multiple physical representations of the same logical table; older releases also held recent changes in an in-memory WOS. However, this design complicates data protection efforts.

Several factors make masking especially important in Vertica environments:

- Wide analytical tables often combine business metrics with sensitive attributes.

- Multiple projections may replicate sensitive columns across the cluster.

- Shared clusters serve BI tools, ETL pipelines, notebooks, and ML jobs simultaneously.

- Ad-hoc SQL queries frequently bypass curated reporting layers.

- Role-based grants on tables and schemas do not, by themselves, redact individual column values.

Vertica privileges decide who can query a table; on their own, they do not control which values appear in query results. Unless a column access policy has been defined for the table, Vertica returns all selected columns in clear form once a query executes. To close this gap consistently across tables and tools, organizations introduce an external masking layer that understands column sensitivity and user context.

For additional background on how Vertica processes analytical workloads, see the official Vertica architecture documentation.

How Dynamic Data Masking Works with Vertica

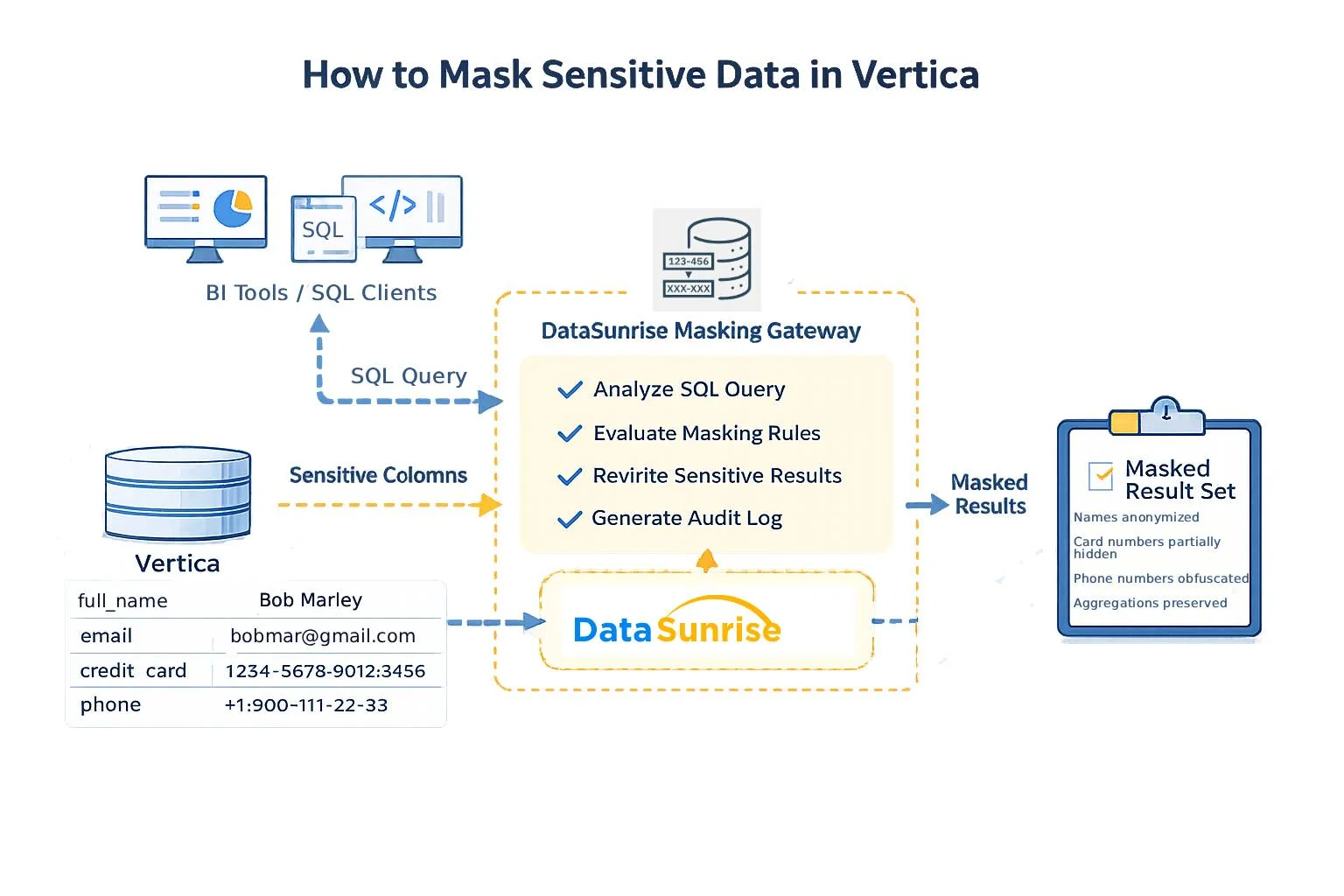

Organizations typically implement dynamic data masking in Vertica using a proxy-based model. In this setup, client applications connect to a masking gateway instead of connecting directly to the database. As a result, every SQL request passes through this gateway, where masking policies are evaluated before execution.

The masking workflow follows a consistent sequence:

- The masking engine parses and analyzes the SQL statement.

- The engine checks referenced columns against a sensitivity catalog.

- Masking rules are evaluated based on user, application, or environment.

- The gateway rewrites query results so sensitive values appear masked.

The system leaves underlying Vertica tables and projections unchanged. Because masking occurs only in the returned result set, this approach avoids data duplication and does not alter how Vertica itself plans or executes the query.
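The sequence above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how DataSunrise or any particular gateway is implemented: the catalog contents, the `PRIVILEGED_USERS` set, the `public.customers` table, and the function names are all hypothetical, and the "parser" is a deliberately naive regular expression that only handles a single-table `SELECT`.

```python
import re

# Hypothetical sensitivity catalog: (schema, table) -> set of sensitive columns.
SENSITIVITY_CATALOG = {
    ("public", "customers"): {"full_name", "credit_card"},
}

# Hypothetical policy: users listed here receive clear values.
PRIVILEGED_USERS = {"dbadmin"}


def referenced_table(sql):
    """Naive parse step: extract schema.table from a single-table SELECT."""
    match = re.search(r"\bfrom\s+(\w+)\.(\w+)", sql, flags=re.IGNORECASE)
    if match is None:
        return None
    return (match.group(1).lower(), match.group(2).lower())


def mask_rows(sql, user, columns, rows):
    """Replace sensitive column values in the result set for non-privileged users."""
    table = referenced_table(sql)
    sensitive = SENSITIVITY_CATALOG.get(table, set())
    if user in PRIVILEGED_USERS or not sensitive:
        return rows
    # Positions of result columns that the catalog marks as sensitive.
    masked_idx = {i for i, name in enumerate(columns) if name in sensitive}
    return [
        tuple("***" if i in masked_idx else value for i, value in enumerate(row))
        for row in rows
    ]
```

The stored rows are never touched; only the tuples handed back to the client differ, which is the property the proxy model relies on.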

Many organizations implement this model using DataSunrise Data Compliance, which provides a centralized masking and governance layer in front of Vertica.

Architecture: How to Mask Sensitive Data in Vertica Before It Leaves the Database

The diagram below illustrates how organizations mask sensitive data before it reaches BI tools, SQL clients, or analytics applications. In practice, all requests pass through a dedicated masking gateway that enforces policies consistently.

This architecture ensures that:

- Applications continue using standard SQL without modification.

- Sensitive values never reach client tools in clear form.

- Masking rules apply uniformly across all tools and users.

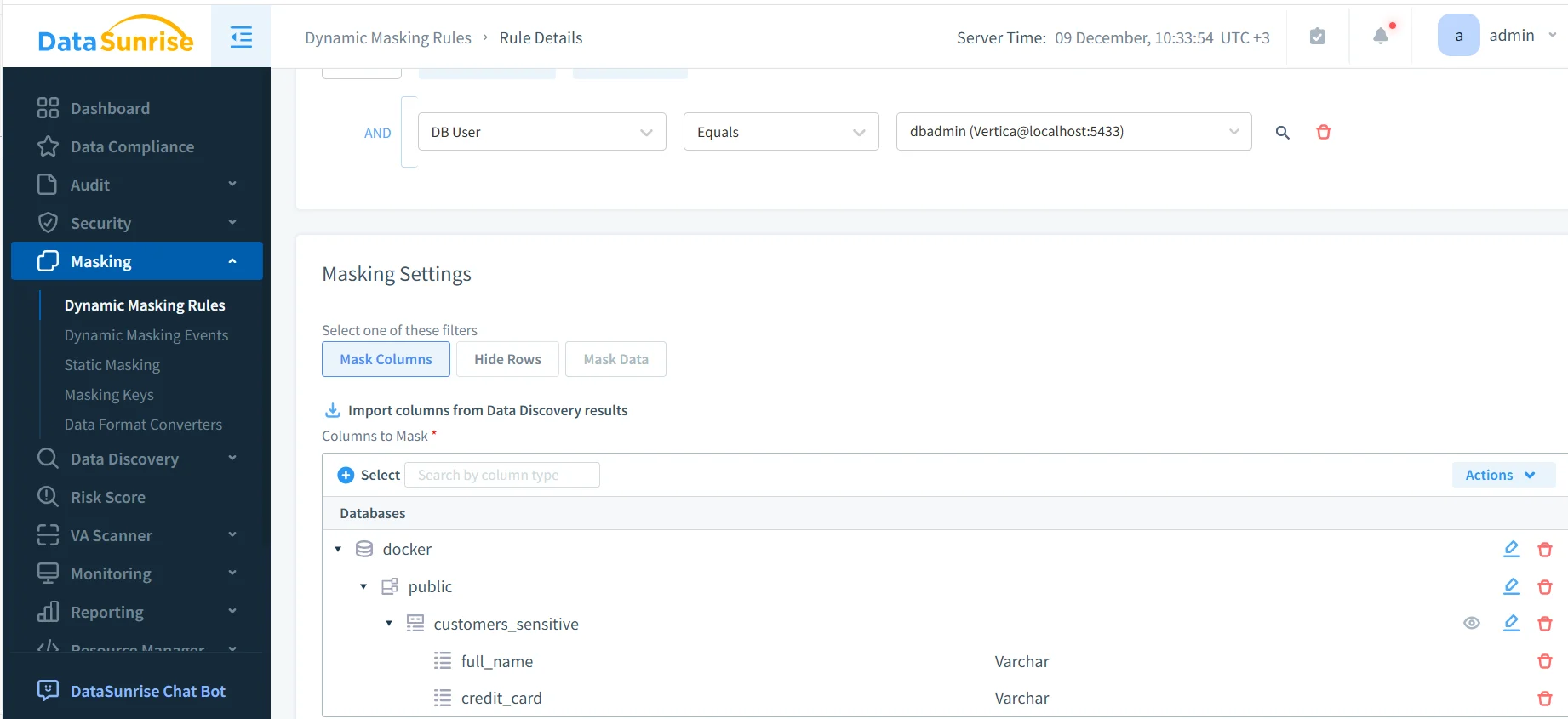

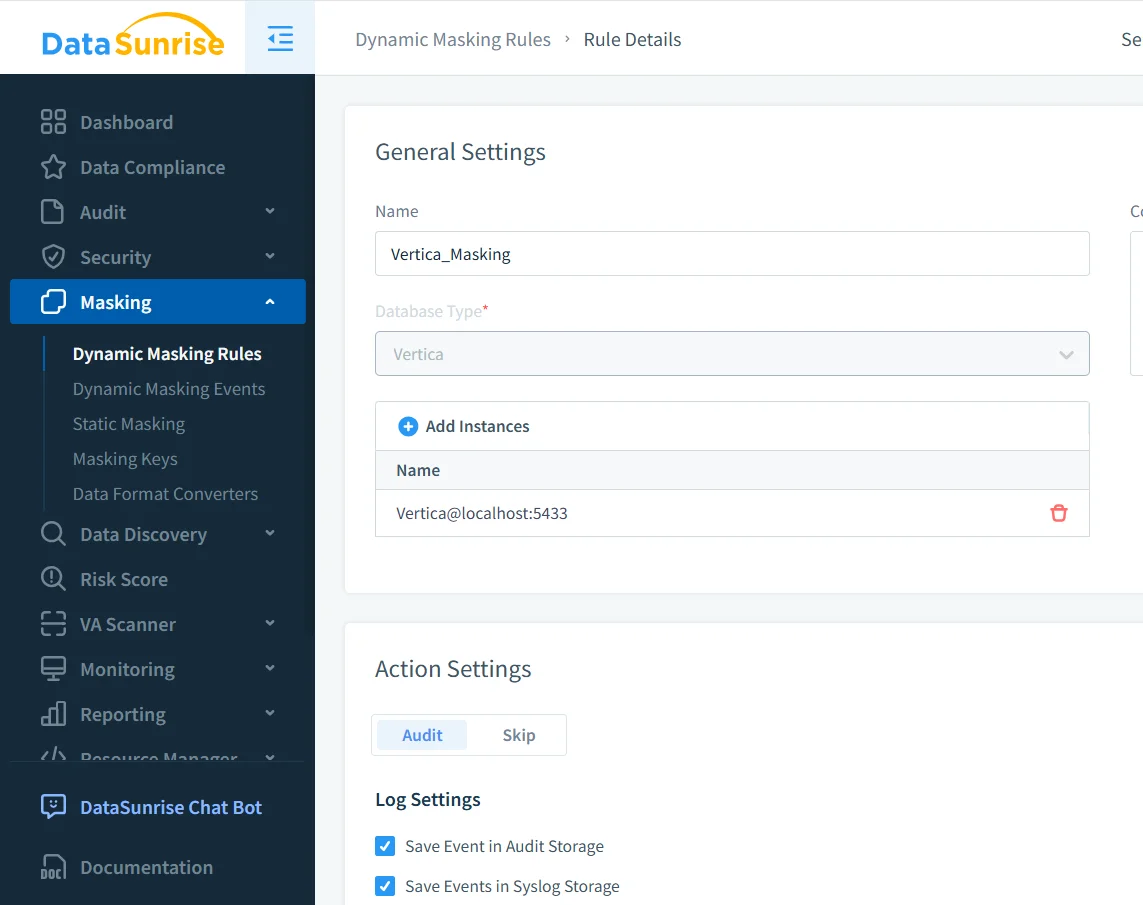

Configuring a Dynamic Masking Rule in Vertica

The first practical step in understanding how to mask sensitive data in Vertica involves defining a dynamic masking rule. This rule specifies which Vertica instance to protect, which columns are sensitive, and how masking should behave.

In this example, the administrator configures a masking rule for a Vertica database instance and applies it to a specific schema and table. Sensitive columns such as full_name and credit_card are selected explicitly. Once enabled, the rule applies automatically to every matching query.

You can import columns directly from Sensitive Data Discovery results. This approach reduces manual errors and helps ensure that newly discovered sensitive columns are brought under a masking rule.

Administrators can refine masking rules further using conditions such as:

- Database user or role

- Client application type

- Network location or environment

Because the rule operates outside Vertica, it remains effective even as schemas evolve or projections change.
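To make the shape of such a rule concrete, here is a minimal sketch of a rule object with the three kinds of conditions listed above. It is an assumption-laden illustration, not the DataSunrise configuration format: the `MaskingRule` class, its field names, and the role, application, and network values are all hypothetical.

```python
from dataclasses import dataclass, field
from ipaddress import ip_address, ip_network


@dataclass
class MaskingRule:
    """Hypothetical masking rule: which columns to mask and for whom."""
    schema: str
    table: str
    columns: set
    exempt_roles: set = field(default_factory=set)       # roles that see clear values
    masked_apps: set = field(default_factory=set)        # empty set means any application
    masked_networks: list = field(default_factory=list)  # empty list means any network

    def applies_to(self, role, app, client_ip):
        """Return True when a session with these attributes should be masked."""
        if role in self.exempt_roles:
            return False
        if self.masked_apps and app not in self.masked_apps:
            return False
        if self.masked_networks:
            addr = ip_address(client_ip)
            return any(addr in ip_network(net) for net in self.masked_networks)
        return True


rule = MaskingRule(
    schema="public",
    table="customers",
    columns={"full_name", "credit_card"},
    exempt_roles={"compliance_officer"},
    masked_networks=["10.0.0.0/8"],
)
```

With this rule, `rule.applies_to("analyst", "tableau", "10.1.2.3")` evaluates to `True`, while the same call with the `compliance_officer` role, or from an address outside `10.0.0.0/8`, evaluates to `False`. Nothing in the rule refers to projections or storage layout, which is why it is unaffected by physical changes on the Vertica side.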

Masked Query Results in Practice

From the user’s perspective, dynamic masking does not change how queries are written. Analysts issue the same SQL statements they always have. The only visible difference is in the returned values.

Without masking, query results would include real names, card numbers, or phone numbers. With masking enabled, non-privileged users receive anonymized or partially hidden values. At the same time, aggregations, joins, and filters on non-sensitive columns continue to work as before, so analytical workflows remain intact.
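As an illustration of what "partially hidden" can mean, the sketch below shows two simple masking functions. The function names and the exact masking formats are assumptions chosen for the example; a real masking product typically offers its own set of configurable formats.

```python
def mask_card(number, visible=4, fill="*"):
    """Hide all but the last `visible` digits, keeping separators in place."""
    total_digits = sum(1 for c in number if c.isdigit())
    keep_from = total_digits - visible
    out = []
    seen = 0
    for c in number:
        if c.isdigit():
            out.append(c if seen >= keep_from else fill)
            seen += 1
        else:
            out.append(c)  # keep dashes and spaces so the format stays recognizable
    return "".join(out)


def mask_name(full_name):
    """Keep the first letter of each name part and hide the rest."""
    return " ".join(part[0] + "*" * (len(part) - 1) for part in full_name.split())


print(mask_card("4111-1111-1111-1234"))  # ****-****-****-1234
print(mask_name("Alice Smith"))          # A**** S****
```

Keeping the length and separators intact means downstream reports still render sensibly, while the hidden characters can no longer be recovered from the result set.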

This approach aligns with data minimization and pseudonymization principles defined in GDPR and supports secure analytics under regulations such as HIPAA.

Auditing Masked Access in Vertica

Masking alone does not satisfy compliance requirements. Organizations must also demonstrate that masking was applied consistently. Therefore, dynamic masking works hand in hand with auditing.

Every masked query generates an audit record that captures:

- The database user and client application

- The executed SQL statement

- The masking rule that was applied

- The timestamp and execution context

Instead of parsing multiple Vertica system tables, compliance teams review a centralized audit trail. Consequently, investigations become faster and regulatory audits become easier. For related concepts, see Database Activity Monitoring.
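The audit fields listed above map naturally onto a small structured record. The following sketch assumes a JSON-lines style log; the `MaskedQueryAudit` class, its field names, and the sample values are hypothetical and do not reflect the actual DataSunrise audit schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class MaskedQueryAudit:
    """Hypothetical audit record for one masked query."""
    db_user: str
    client_app: str
    sql: str
    rule_name: str
    timestamp: str
    context: dict


def build_audit_record(db_user, client_app, sql, rule_name, context=None):
    """Create a record stamped with the current UTC time."""
    return MaskedQueryAudit(
        db_user=db_user,
        client_app=client_app,
        sql=sql,
        rule_name=rule_name,
        timestamp=datetime.now(timezone.utc).isoformat(),
        context=context or {},
    )


def to_json_line(record):
    """Serialize the record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = build_audit_record(
    db_user="analyst",
    client_app="tableau",
    sql="SELECT full_name, credit_card FROM public.customers",
    rule_name="mask_customers_pii",
    context={"client_ip": "10.1.2.3"},
)
print(to_json_line(record))
```

Recording the rule name alongside the statement is what lets a reviewer show not only that a query ran, but that masking was in force when it did.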

Dynamic Masking Compared to Other Approaches

| Approach | Description | Limitations |
|---|---|---|
| Static masked tables | Pre-masked copies of production data | High maintenance, data duplication |
| SQL views | Masked columns exposed via views | Bypassed by ad-hoc queries |
| RBAC only | Table or schema-level permissions | No column-level protection |
| Dynamic data masking | Mask values at query time | Requires external enforcement layer |

Best Practices for Masking Sensitive Data in Vertica

- Start with discovery. Automated classification provides the foundation for effective masking.

- Centralize policies. Keep masking logic in DataSunrise rather than scattering it across SQL views.

- Test real workloads. Validate masking using actual BI and notebook queries.

- Review audits regularly. Continuous monitoring helps detect unexpected access patterns early.

- Align with security strategy. Coordinate masking with broader data security controls.
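The "test real workloads" practice can be partly automated. The sketch below scans rows returned through the masking layer for values that still look like full card numbers. It is a heuristic under stated assumptions: the `find_unmasked_cards` helper and the pattern are illustrative only, the pattern will also flag other long digit strings, and it does not validate checksums.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or dashes.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def find_unmasked_cards(rows):
    """Return (row_index, column_index) pairs whose value still looks like a card number."""
    hits = []
    for r, row in enumerate(rows):
        for c, value in enumerate(row):
            if isinstance(value, str) and CARD_PATTERN.search(value):
                hits.append((r, c))
    return hits


# Sample rows as a non-privileged user might receive them; the second row is a leak.
sample_rows = [
    ("A**** S****", "****-****-****-1234"),
    ("Bob Jones", "4111 1111 1111 1111"),
]
print(find_unmasked_cards(sample_rows))  # [(1, 1)]
```

Running a check like this against the output of real BI and notebook queries gives a quick signal when a new column or query path is not yet covered by a masking rule.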

Conclusion

Masking sensitive data in Vertica effectively comes down to applying protection at the right layer. By masking data dynamically at query time, organizations preserve the power of Vertica analytics while reducing the risk of exposing confidential information.

With a dedicated masking gateway, sensitive values remain protected across dashboards, scripts, and pipelines. As a result, analysts continue to work productively, while compliance teams gain visibility and control. This balance makes dynamic data masking a foundational capability for secure analytics in Vertica.
