Sensitive Data Protection in Vertica

Sensitive data protection in Vertica is a foundational requirement for organizations that use the platform as a central analytics engine while processing personal, financial, or regulated information. Vertica is designed for high-throughput analytical workloads, which makes it well suited to BI reporting, customer analytics, and data science. However, that same broad accessibility introduces risk when sensitive values are reachable by many users, tools, and automated pipelines.

In real environments, Vertica clusters rarely serve a single workload. Instead, analysts, BI dashboards, ETL jobs, and machine learning pipelines all query the same tables. As data volumes grow and schemas evolve, traditional controls such as static permissions or manually curated views struggle to keep sensitive information protected. To address this challenge, organizations rely on dynamic, policy-driven protection mechanisms that operate in real time.

This article explains how sensitive data protection is implemented in Vertica using centralized controls, dynamic masking, and auditing, with DataSunrise acting as an external enforcement layer.

Why Sensitive Data Protection Is Complex in Vertica

Vertica’s internal architecture prioritizes analytical performance. Data is stored in columnar ROS containers, and projections create multiple optimized physical layouts of the same logical data. Releases before Vertica 10.0 also buffered recent writes in an in-memory WOS, which has since been removed. While this design accelerates queries, it also makes it difficult to track and protect sensitive attributes consistently.

Several operational realities increase exposure risk:

- Wide analytical tables often mix business metrics with PII or payment data.

- Multiple projections replicate sensitive columns across the cluster.

- Shared environments allow both trusted and semi-trusted users to query the same datasets.

- Ad-hoc SQL queries bypass predefined reporting or governance layers.

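- Native role-based access control grants or denies access to objects but does not by itself redact values. Vertica column access policies can mask values, yet each policy has to be defined and maintained per table and column inside the database.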

As a result, Vertica can return sensitive values in clear text as soon as a user has SELECT access. To reduce this risk, organizations introduce protection mechanisms that evaluate queries and transform results before data reaches the client.

For architectural background, see the official Vertica architecture documentation.

Centralized Sensitive Data Protection Architecture

A common approach to protecting sensitive data in Vertica is to separate enforcement from storage. In this model, client applications connect through a centralized security gateway rather than directly to Vertica. Every SQL query is inspected before execution, and protection policies are applied consistently.

Many organizations implement this architecture using DataSunrise Data Compliance. DataSunrise operates as a transparent proxy in front of Vertica, enforcing protection rules without changing database schemas or application logic.

This centralized layer enables:

- Automatic identification of sensitive columns.

- Real-time masking of protected values.

- Consistent enforcement across BI tools, scripts, and services.

- Unified audit logging for compliance evidence.
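As a rough illustration of what "connecting through the gateway" means for a client, the sketch below changes only the network endpoint and leaves the database name and user untouched. The host names are hypothetical placeholders, and the settings class is an illustrative structure rather than any DataSunrise or Vertica client API; 5433 is Vertica's default client port.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ConnectionSettings:
    """Illustrative container for the values a client driver needs."""
    host: str
    port: int
    database: str
    user: str


# Hypothetical direct connection to a Vertica node.
DIRECT = ConnectionSettings(
    host="vertica-node1.example.internal",
    port=5433,
    database="analytics",
    user="bi_reader",
)


def via_gateway(settings: ConnectionSettings, gateway_host: str,
                gateway_port: int) -> ConnectionSettings:
    """Return the same settings, pointed at the proxy instead of Vertica."""
    return replace(settings, host=gateway_host, port=gateway_port)


# Hypothetical gateway endpoint; only host and port differ from DIRECT.
PROXIED = via_gateway(DIRECT, "masking-gateway.example.internal", 5433)
```

Because nothing else in the settings changes, BI tools and scripts can usually be repointed through configuration alone, which is what makes the proxy transparent to application logic.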

From an operational standpoint, this architecture also simplifies long-term maintenance. Instead of embedding protection logic inside dozens of SQL views, ETL scripts, or BI dashboards, teams manage policies in a single location. When compliance requirements change, administrators update rules centrally, and the change takes effect for every Vertica workload that connects through the gateway.

Moreover, this separation of duties aligns well with modern security models. Database administrators continue managing performance, projections, and storage, while security and compliance teams control masking, auditing, and access behavior. This clear boundary reduces operational friction and minimizes the risk of accidental misconfiguration.

Dynamic Masking as the Core Protection Mechanism

Dynamic data masking is one of the most effective techniques for sensitive data protection in Vertica. Instead of altering stored data, masking is applied at query time. When a user or application requests data, sensitive values are replaced with anonymized or partially hidden representations in the result set.
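To make the idea concrete, here is a minimal sketch of query-time masking applied to a result set. It is not how DataSunrise implements masking; the function names, the column names `email` and `card_number`, and the masking formats are assumptions chosen for illustration.

```python
def mask_email(value: str) -> str:
    """Keep the first character and the domain, hide the rest."""
    local, sep, domain = value.partition("@")
    if not sep or not local:
        return "***"
    return local[0] + "***@" + domain


def mask_card(value: str) -> str:
    """Show only the last four digits of a card number."""
    digits = [c for c in value if c.isdigit()]
    if len(digits) < 4:
        return "*" * len(value)
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


# Hypothetical mapping from column name to masking function.
MASKS = {"email": mask_email, "card_number": mask_card}


def mask_rows(rows, masks=MASKS):
    """Return copies of the rows with protected columns masked.

    The input rows (standing in for stored data) are not modified.
    """
    masked = []
    for row in rows:
        new_row = {}
        for col, val in row.items():
            if col in masks and val is not None:
                new_row[col] = masks[col](val)
            else:
                new_row[col] = val
        masked.append(new_row)
    return masked
```

For example, `mask_rows([{"email": "alice@example.com", "amount": 10}])` leaves `amount` untouched and returns the email as `a***@example.com`.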

DataSunrise provides built-in dynamic data masking that evaluates each query against policy rules. These rules can consider:

- The database user or role.

- The client application type.

- The environment (production, staging, analytics).

- The sensitivity classification of each column.
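The criteria above can be pictured as a rule-matching step. The sketch below is a simplified model under stated assumptions: the `QueryContext` and `MaskingRule` classes, the role names, and the first-match semantics are all hypothetical and do not reflect the DataSunrise rule format.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Optional


@dataclass(frozen=True)
class QueryContext:
    """Who is asking, from which tool, in which environment."""
    user: str
    roles: FrozenSet[str]
    application: str
    environment: str


@dataclass(frozen=True)
class MaskingRule:
    """Hypothetical rule: mask columns of the given classifications."""
    name: str
    classifications: FrozenSet[str]
    exempt_roles: FrozenSet[str] = frozenset()
    applications: Optional[FrozenSet[str]] = None   # None means any application
    environments: Optional[FrozenSet[str]] = None   # None means any environment

    def applies(self, ctx: QueryContext, classification: str) -> bool:
        if classification not in self.classifications:
            return False
        if ctx.roles & self.exempt_roles:
            return False
        if self.applications is not None and ctx.application not in self.applications:
            return False
        if self.environments is not None and ctx.environment not in self.environments:
            return False
        return True


def first_matching_rule(rules: Iterable[MaskingRule], ctx: QueryContext,
                        classification: str) -> Optional[MaskingRule]:
    """Return the first rule that applies, or None if the column stays clear."""
    for rule in rules:
        if rule.applies(ctx, classification):
            return rule
    return None


RULE = MaskingRule(
    name="mask_pii_in_prod",
    classifications=frozenset({"pii", "payment"}),
    exempt_roles=frozenset({"compliance_officer"}),
    environments=frozenset({"production"}),
)
ANALYST = QueryContext("jdoe", frozenset({"analyst"}), "tableau", "production")
OFFICER = QueryContext("asmith", frozenset({"compliance_officer"}), "vsql", "production")
```

With these definitions, the analyst's query on a `pii` column matches `mask_pii_in_prod`, while the compliance officer's identical query matches no rule and would see clear values.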

The underlying Vertica tables remain unchanged, which preserves the existing physical design and avoids data duplication. At the same time, sessions covered by a masking rule never receive the protected values in clear form.

Another important advantage of dynamic masking is its ability to preserve analytical accuracy. Unlike static masking or redaction during ingestion, calculations and aggregations continue to operate on real values internally. Masked representations apply only at the presentation layer.

This distinction is especially important in Vertica environments, where analytical correctness and performance are tightly coupled. Business metrics, trend analysis, and machine learning feature extraction remain reliable while sensitive attributes stay protected.
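The difference between masking at presentation time and masking before computation can be shown with a toy aggregation. This is only a sketch of the principle, with made-up rows and a deliberately crude mask; it does not describe how Vertica or DataSunrise execute queries.

```python
from collections import defaultdict

# Made-up sample rows standing in for a fact table.
ROWS = [
    {"customer_email": "alice@example.com", "amount": 120.0},
    {"customer_email": "bob@example.com", "amount": 80.0},
    {"customer_email": "alice@example.com", "amount": 30.0},
]


def total_by_customer(rows):
    """Aggregate on the real identifiers so groups stay distinct."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["customer_email"]] += row["amount"]
    return dict(totals)


def present(totals):
    """Mask the identifier only when building the output rows."""
    return [
        {"customer_email": email[0] + "***", "total": total}
        for email, total in sorted(totals.items())
    ]


REPORT = present(total_by_customer(ROWS))
```

Grouping happens on the real emails, so two different customers whose addresses start with the same letter would still get separate totals; masking first would have merged them into a single `a***` group.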

Configuring Sensitive Data Protection Rules

Protection rules define how sensitive data should be handled. A typical rule targets a specific Vertica instance, selects one or more schemas or tables, and identifies which columns require protection.

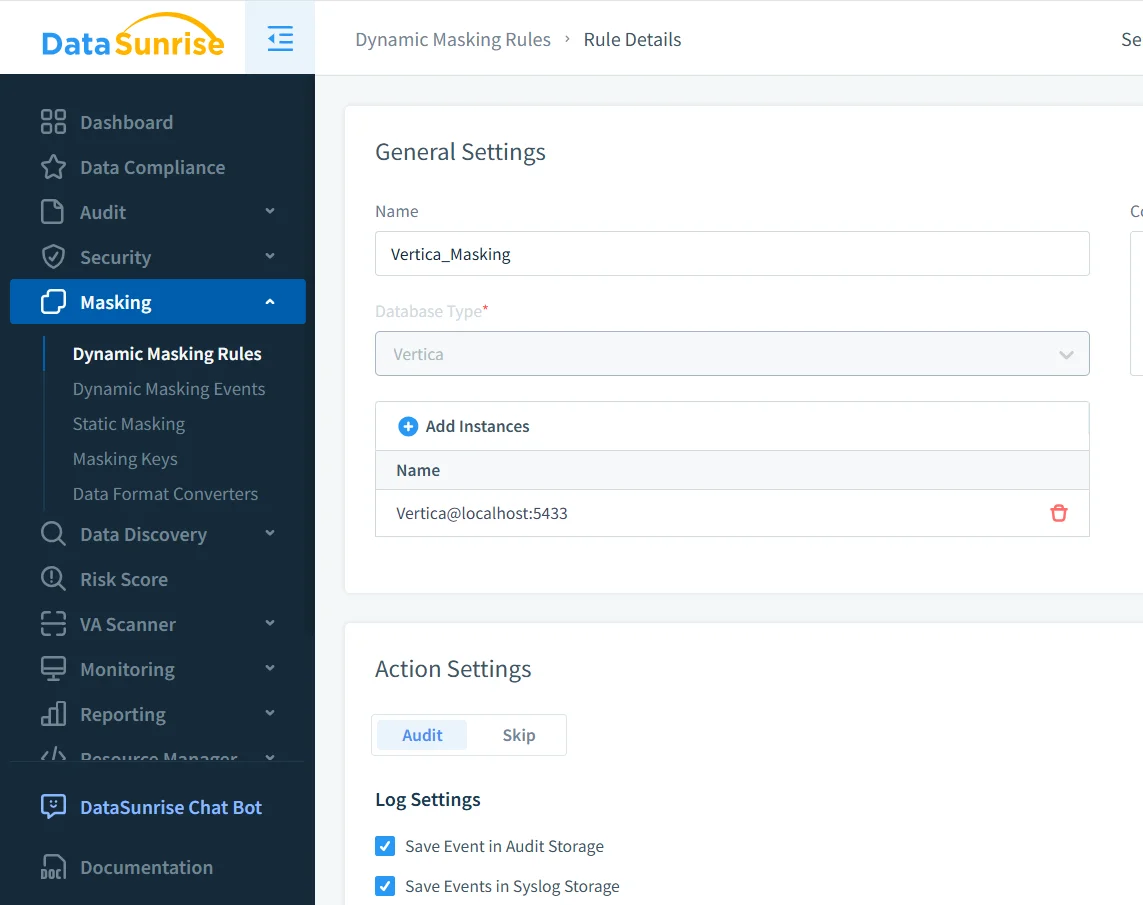

Dynamic masking rule setup for a Vertica database instance.

At this stage, administrators associate the Vertica instance, define masking behavior, and enable auditing. Because rules live outside Vertica, they remain effective even as schemas and projections evolve.

When onboarding new Vertica tables, run discovery scans first and link masking rules to Sensitive Data Discovery results. This prevents newly added sensitive columns from being exposed by default.

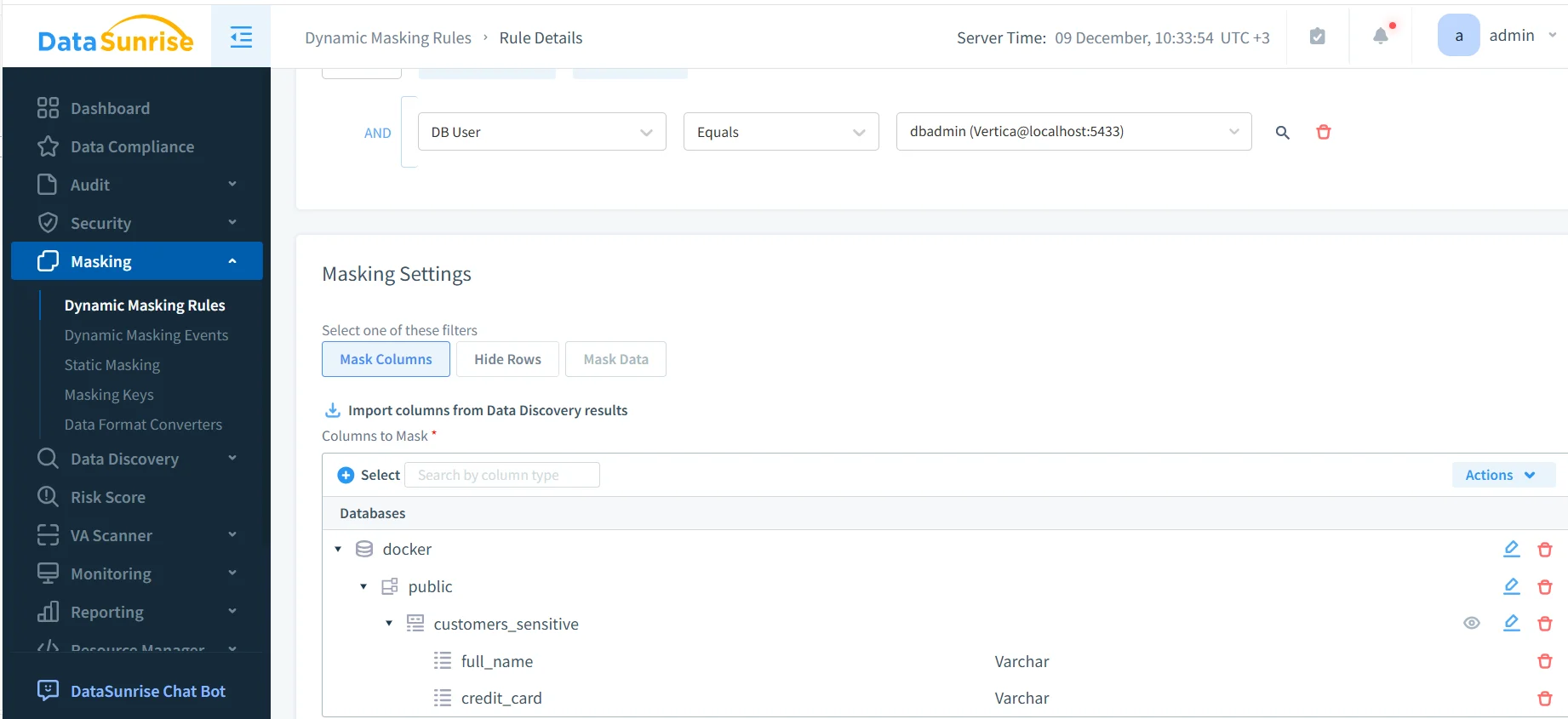

Once the rule exists, administrators select which columns require protection. Column lists are often imported directly from discovery results.

Selecting sensitive columns such as names and payment data for masking.

This discovery-driven approach significantly reduces blind spots and manual effort.
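A discovery scan can be approximated as pattern matching over column names and sampled values. The following sketch is a simplified stand-in, not the Sensitive Data Discovery engine: the regular expressions, labels, and sample data are assumptions, and a production scanner would use far more robust detection.

```python
import re

# Hypothetical name hints and value patterns for illustration only.
NAME_HINTS = re.compile(
    r"(email|ssn|phone|card|first_name|last_name|full_name)", re.IGNORECASE
)
VALUE_PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "card": re.compile(r"^(?:\d[ -]?){13,19}$"),
}


def classify_column(name, samples):
    """Return a label if the column looks sensitive, else None."""
    non_null = [s for s in samples if s]
    for label, pattern in VALUE_PATTERNS.items():
        if non_null and all(pattern.match(s) for s in non_null):
            return label
    if NAME_HINTS.search(name):
        return "name_hint"
    return None


def discover(table_samples):
    """Map each flagged column to its label; unflagged columns are omitted."""
    findings = {}
    for column, samples in table_samples.items():
        label = classify_column(column, samples)
        if label is not None:
            findings[column] = label
    return findings


SAMPLES = {
    "contact": ["alice@example.com", "bob@example.org"],
    "pay_ref": ["4111 1111 1111 1234"],
    "last_name": ["Smith"],
    "amount": ["120.50"],
}
FINDINGS = discover(SAMPLES)
```

The keys of `FINDINGS` could then seed the column list of a masking rule, so a column such as `contact` is caught by its values even though its name gives no hint.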

Masked Results in Analytical Workflows

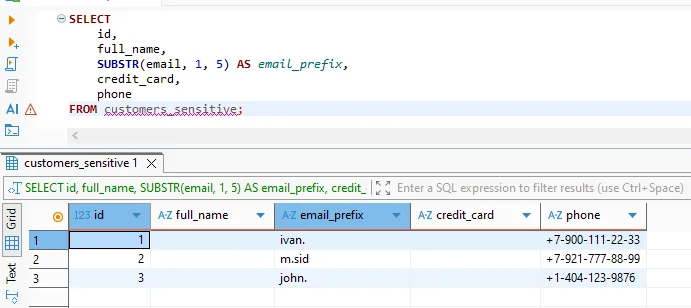

From the perspective of analysts and applications, sensitive data protection is transparent. Queries are written using standard SQL, and Vertica executes them normally. The difference appears only in the returned values.

Masked results still support joins, filters, aggregations, and groupings. This makes dynamic masking suitable for BI dashboards, exploratory analysis, and feature engineering workflows.

Because protection applies uniformly, teams avoid maintaining separate “safe” tables or rewriting reports. Instead, policies follow the user and context, not the query.

In addition, dynamic masking supports collaborative analytics. Multiple teams can safely query the same Vertica tables with different visibility levels, enabling broader data access without compromising confidentiality.

Auditing and Visibility for Sensitive Data Access

Protection without visibility is insufficient for compliance. Organizations must demonstrate that sensitive data was protected consistently and that access was monitored.

Audit trail showing masked query execution and rule enforcement.

DataSunrise automatically records audit events for every protected query. These records include:

- The database user and client application.

- The executed SQL statement.

- The protection rule that was triggered.

- The execution timestamp and context.

These audit logs integrate with Database Activity Monitoring and can be forwarded to SIEM platforms for long-term retention.
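An audit record carrying the fields listed above might be modeled and serialized as follows. The field names and the JSON-lines output are assumptions made for this sketch; the actual DataSunrise log schema and SIEM transport are not specified here.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditEvent:
    """Hypothetical shape of one audit record."""
    db_user: str
    application: str
    sql: str
    rule: str
    executed_at: str  # ISO 8601 timestamp in UTC


def make_event(db_user: str, application: str, sql: str, rule: str,
               now: Optional[datetime] = None) -> AuditEvent:
    """Build an event, stamping it with the current UTC time by default."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return AuditEvent(db_user, application, sql, rule, timestamp)


def to_siem_line(event: AuditEvent) -> str:
    """Serialize one event as a single JSON line with stable key order."""
    return json.dumps(asdict(event), sort_keys=True)


EXAMPLE = make_event(
    "jdoe",
    "tableau",
    "SELECT email, amount FROM sales.orders",
    "mask_pii_in_prod",
    now=datetime(2024, 1, 1, tzinfo=timezone.utc),
)
```

One JSON object per line is a common interchange shape for log forwarders, which is why it is used here; the real integration may use a different format.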

Auditing also plays a critical role in incident response. When unusual access patterns appear, compliance teams can quickly determine whether sensitive data was returned unmasked, which rules applied, and which applications initiated the queries.
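A first-pass triage over exported audit records could look like the sketch below. It assumes each record is a dictionary with `sql`, `application`, and `rule` keys (with `rule` set to `None` when no masking rule fired), and it uses naive substring matching on column names; both are simplifications for illustration.

```python
from collections import Counter


def unmasked_sensitive_access(events, sensitive_columns):
    """Return events whose SQL mentions a sensitive column but fired no rule.

    Substring matching is a deliberate simplification; a real tool would
    parse the SQL rather than search its text.
    """
    hits = []
    for event in events:
        sql = event["sql"].lower()
        touched = [c for c in sensitive_columns if c.lower() in sql]
        if touched and event.get("rule") is None:
            hits.append(event)
    return hits


def queries_per_application(events):
    """Count events by the client application that issued them."""
    return Counter(event["application"] for event in events)


# Made-up audit records for demonstration.
EVENTS = [
    {"sql": "SELECT email FROM crm.customers", "application": "tableau",
     "rule": "mask_pii_in_prod"},
    {"sql": "SELECT email, card_number FROM crm.customers", "application": "adhoc_script",
     "rule": None},
    {"sql": "SELECT region, SUM(amount) FROM sales.orders GROUP BY region",
     "application": "tableau", "rule": None},
]
SUSPECT = unmasked_sensitive_access(EVENTS, ["email", "card_number"])
```

Here only the `adhoc_script` query is flagged, since it touched sensitive columns without any rule being recorded, while the aggregate over non-sensitive columns is ignored.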

Protection Techniques Compared

| Technique | Description | Suitability for Vertica |
|---|---|---|
| Static masked copies | Create separate masked datasets | High maintenance, not scalable |
| SQL views | Expose masked columns via views | Easily bypassed by direct queries |
| RBAC only | Restrict access at table level | No value-level protection |
| Dynamic masking | Rewrite results at query time | Centralized and scalable |

Best Practices for Sensitive Data Protection in Vertica

- Begin with automated discovery to identify sensitive fields.

- Apply protection at the query layer instead of copying data.

- Test policies using real BI and analytics workloads.

- Review audit logs regularly for unexpected access patterns.

- Align masking rules with broader data security strategies.

Conclusion

Sensitive data protection in Vertica requires controls that match the scale and flexibility of analytical workloads. Dynamic masking, centralized enforcement, and unified auditing allow organizations to protect regulated information without sacrificing performance or usability.

By deploying a dedicated protection layer with DataSunrise, teams gain consistent safeguards across dashboards, scripts, and pipelines. As data volumes and user access grow, this approach ensures that sensitive information remains protected while Vertica continues to deliver high-performance analytics.
